How and why I built How to upload large files to AWS S3 or server with nodejs

About me

I am Saurabh Patwal, based in Delhi, India. I work as a full-stack developer.

The problem I wanted to solve

Uploading large files to AWS S3 is tedious: the upload often stops responding or fails with a timeout error.

What is How to upload large files to AWS S3 or server with nodejs?

Tech stack

We are using Node.js v10+, Express, the AWS SDK, and AWS S3.

The process of building How to upload large files to AWS S3 or server with nodejs

Accepting file uploads on a Node.js server with Express requires additional middleware to parse the files that arrive in the request as multipart form data.
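To illustrate why dedicated middleware is needed: a multipart request carries a boundary string in its Content-Type header, and the body is split on that boundary into one part per field or file. The sketch below only extracts the boundary. The function name `getBoundary` is hypothetical, and a real parser such as busboy does far more (streaming, part headers, encodings).

```javascript
// Hypothetical helper: pull the multipart boundary out of a Content-Type header.
// Returns null when the header is not multipart/form-data or has no boundary.
function getBoundary(contentType) {
  if (typeof contentType !== "string") return null;
  if (!/^multipart\/form-data/i.test(contentType.trim())) return null;
  // The boundary may be quoted or unquoted
  const match = /boundary=(?:"([^"]+)"|([^;\s]+))/i.exec(contentType);
  return match ? match[1] || match[2] : null;
}

console.log(getBoundary("multipart/form-data; boundary=----abc123"));
console.log(getBoundary("application/json"));
```

Everything after this step (finding each part, reading its headers, streaming its bytes) is what the middleware takes care of.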

To handle files we generally use busboy (https://www.npmjs.com/package/busboy).

To save uploaded files on the server, an additional package is used: multer (https://www.npmjs.com/package/multer).

Another package is required to upload the files to AWS S3: multer-s3 (https://www.npmjs.com/package/multer-s3), together with aws-sdk (https://www.npmjs.com/package/aws-sdk).
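Whichever package does the work, the filename sent by the client usually ends up in a disk path or an S3 key, so it is worth reducing it to a plain base name first. The following is a minimal sketch of that idea. `safeSavePath` and the upload directory are illustrative names, not part of multer or multer-s3.

```javascript
const path = require("path");

// Hypothetical helper: build a save path inside uploadDir from a
// client-supplied filename, dropping any directory components.
function safeSavePath(uploadDir, clientFilename) {
  // Normalise Windows separators so basename treats them as directories too
  const normalised = String(clientFilename).replace(/\\/g, "/");
  const base = path.basename(normalised);
  if (!base || base === "." || base === "..") {
    throw new Error("Invalid filename");
  }
  return path.join(uploadDir, base);
}

// "../../etc/passwd" is reduced to "passwd" inside the upload directory
console.log(safeSavePath("/tmp/uploads", "../../etc/passwd"));
```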

Challenges I faced

The problem with general-purpose packages like multer and multer-s3 is that, in my experience, they only work well for small files (less than 50 MB).

To upload large files we need to create our own custom module.
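One building block such a custom module can rely on is S3's multipart upload, where a large object is sent as a series of parts rather than in a single request. As a rough sketch of the arithmetic only, the hypothetical `planParts` function below splits a file size into byte ranges. The 5 MB default reflects S3's minimum size for every part except the last.

```javascript
// Hypothetical helper: split totalBytes into consecutive parts of at most
// partSize bytes. Each range has an inclusive start and an exclusive end.
function planParts(totalBytes, partSize = 5 * 1024 * 1024) {
  if (!Number.isInteger(totalBytes) || totalBytes < 0) {
    throw new RangeError("totalBytes must be a non-negative integer");
  }
  if (!Number.isInteger(partSize) || partSize <= 0) {
    throw new RangeError("partSize must be a positive integer");
  }
  const parts = [];
  for (let start = 0, n = 1; start < totalBytes; start += partSize, n += 1) {
    parts.push({
      partNumber: n, // S3 part numbers start at 1
      start,
      end: Math.min(start + partSize, totalBytes),
    });
  }
  return parts;
}

const MB = 1024 * 1024;
const parts = planParts(12 * MB);
console.log(parts.length); // a 12 MB file needs 3 parts: 5 MB, 5 MB, 2 MB
console.log(parts[2]);
```

With a `partSize` of 5 MB and a `queueSize` of 10, as used in the uploader in this post, up to ten such parts would be in flight at once.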

Key learnings

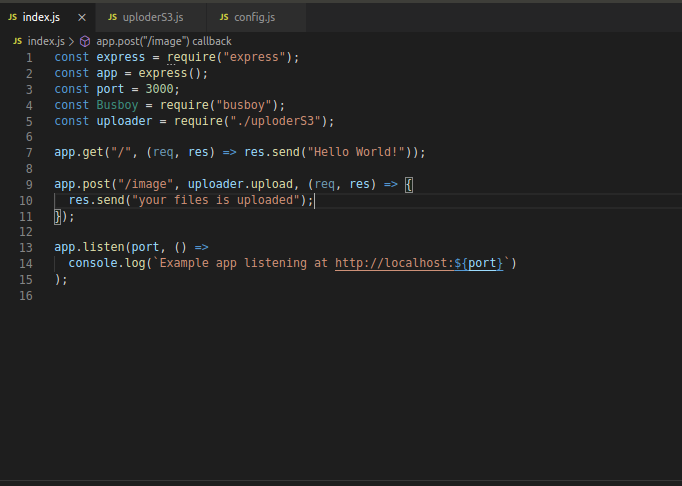

### Creating an Express server

```

const express = require("express");

const app = express();

const port = 3000;

const Busboy = require("busboy");

const uploader = require("./uploderS3");

app.get("/", (req, res) => res.send("Hello World!"));

app.post("/image", uploader.upload, (req, res) => {

res.send("your files is uploaded");

});

app.listen(port, () =>

console.log(Example app listening at http://localhost:${port})

);

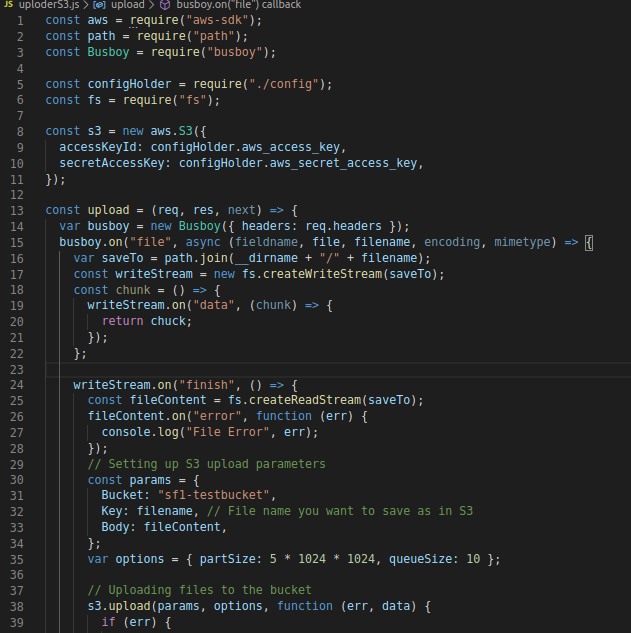

### Creating your own custom uploader to S3

const aws = require("aws-sdk");

const path = require("path");

const Busboy = require("busboy");

const configHolder = require("./config");

const fs = require("fs");

const s3 = new aws.S3({

accessKeyId: configHolder.aws_access_key,

secretAccessKey: configHolder.aws_secret_access_key,

});

const upload = (req, res, next) => {

var busboy = new Busboy({ headers: req.headers });

busboy.on("file", async (fieldname, file, filename, encoding, mimetype) => {

var saveTo = path.join(__dirname + "/" + filename);

const writeStream = new fs.createWriteStream(saveTo);

const chunk = () => {

writeStream.on("data", (chunk) => {

return chuck;

});

};

writeStream.on("finish", () => {

const fileContent = fs.createReadStream(saveTo);

fileContent.on("error", function (err) {

console.log("File Error", err);

});

// Setting up S3 upload parameters

const params = {

Bucket: "sf1-testbucket",

Key: filename, // File name you want to save as in S3

Body: fileContent,

};

var options = { partSize: 5 * 1024 * 1024, queueSize: 10 };

// Uploading files to the bucket

s3.upload(params, options, function (err, data) {

if (err) {

console.log("error ", err);

throw err;

}

console.log(`File uploaded successfully. ${data.Location}`);

fs.unlink(saveTo, (err) => {

throw new Error(err);

});

}).on("httpUploadProgress", function (evt) {

console.log(

"Completed " +

((evt.loaded * 100) / evt.total).toFixed() +

"% of upload"

);

});

});

file.pipe(writeStream);

});

busboy.on("finish", function () {

res.writeHead(200, { Connection: "close" });

res.end("Upload finished");

});

return req.pipe(busboy);

};

module.exports = uploader = {

upload,

};

## Final thoughts and next steps