Understanding Android Camera Architecture - Part 1

Android is the most popular mobile operating system in the market today. Towards the end of the last decade, the camera emerged as one of the most important factors driving smartphone sales, and OEMs are competing hard to stay on top. Smartphone cameras are built much like digital cameras, squeezed into a compact package. Backed by powerful on-device processing, they have taken a big leap and now compete with dedicated digital cameras. In this post, I’ll cover the general hardware architecture of modern Android cameras.

This is a repost of my original article at blog.minhazav.dev/android-camera-hardware-explained posted on 31st Jan 2020. Read the full article there.

Figure: Android camera low-level architecture (source). Many of the basic steps are done in specialised hardware explained in detail below.

Components

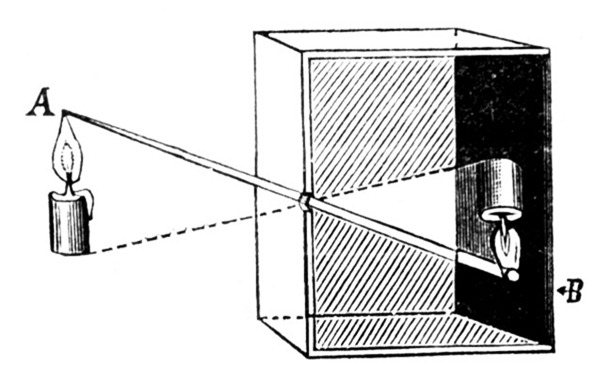

Figure: Pinhole camera.

Between roughly 400 BC and 300 BC, philosophers in some ancient cultures were among the first people to experiment with the camera obscura design for creating images. The idea is simple enough: set up a sufficiently dark room with only a tiny bit of light entering through a pinhole opposite a flat plane. The light travels in straight lines, crosses at the pinhole, and creates an image on the flat plane on the other side. The result is an upside-down version of the objects being beamed in from the opposite side of the pinhole: an incredible miracle, and an amazing scientific discovery for people who lived more than a millennium before the “middle ages.”
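The upside-down image follows directly from light travelling in straight lines through a single point. A minimal sketch of the ideal pinhole model, assuming a point at horizontal offset `x`, height `y` and distance `z` in front of the hole, and an image plane a distance `d` behind it (the function and parameter names are ours, for illustration only):

```python
def pinhole_project(x, y, z, d):
    """Project a 3D point (x, y, z), z > 0 in front of the pinhole, onto an
    image plane a distance d behind the pinhole (ideal pinhole model)."""
    if z <= 0:
        raise ValueError("point must be in front of the pinhole")
    # Similar triangles: every ray crosses at the pinhole, so both
    # coordinates flip sign and the image comes out upside down.
    return (-d * x / z, -d * y / z)

# A point 1 m to the side and 2 m up, 10 m away, with the plane 0.5 m behind the hole
print(pinhole_project(1.0, 2.0, 10.0, 0.5))
```

The negative coordinates are the inversion: something above and to the right of the pinhole lands below and to the left on the plane.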

More than two thousand years later, cameras have taken a big leap, and tiny cameras on smartphones can capture detailed, high-resolution images that compete with full-fledged digital cameras. A standard smartphone camera has the following components:

Legend

HAL: Hardware Abstraction Layer

ISP: Image Signal Processor

DSP: Digital Signal Processor

3A: Auto Exposure, Auto Focus, Auto White-Balance

SOC: System on a Chip

OIS: Optical Image Stabilization

Lens

The task of the lens is to focus the incoming light on the sensor.

- Moving the lens along its optical axis, closer to or farther from the sensor, changes the focus.

- Some camera chassis allow vertical or multi-axis movement of the lens (and sometimes the sensor) to counteract the movement of the camera as a whole, providing stabilization against sudden movements. This is called Optical Image Stabilization (OIS). It helps in capturing less blurry photos and more stable video.

- The aperture, which controls the amount of light that reaches the sensor, is usually part of the lens. Smartphones usually come with a fixed-aperture lens. To provide functionality similar to digital cameras with variable-aperture lenses, smartphones these days come with multiple lenses.
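Why moving the lens changes focus can be seen from the thin-lens equation 1/f = 1/u + 1/v, where f is the focal length, u the subject distance and v the lens-to-sensor distance. A rough sketch, assuming an ideal thin lens and a hypothetical 4 mm focal length (real phone modules use compound lenses, so the numbers are only indicative). Android's Camera2 API nominally expresses manual focus distance (`CaptureRequest.LENS_FOCUS_DISTANCE`) in diopters, i.e. 1/metres with 0 meaning infinity, so the sketch also converts to that unit; the helper names are ours.

```python
import math

def image_distance_mm(focal_length_mm, subject_distance_mm):
    """Thin-lens equation 1/f = 1/u + 1/v, solved for the lens-to-sensor distance v."""
    if math.isinf(subject_distance_mm):
        return focal_length_mm  # focused at infinity: the sensor sits at the focal plane
    if subject_distance_mm <= focal_length_mm:
        raise ValueError("subject must be farther away than the focal length")
    return focal_length_mm * subject_distance_mm / (subject_distance_mm - focal_length_mm)

def to_diopters(subject_distance_m):
    """Focus distance in diopters (1/metres); 0.0 means focused at infinity."""
    return 0.0 if math.isinf(subject_distance_m) else 1.0 / subject_distance_m

f = 4.0  # hypothetical main-camera focal length in mm
for u_m in (math.inf, 1.0, 0.1):
    v = image_distance_mm(f, u_m * 1000)
    shift_um = (v - f) * 1000  # how far the actuator must move the lens away from the sensor
    print(f"{u_m} m -> {to_diopters(u_m):.1f} D, lens shift {shift_um:.1f} um")
```

The closer the subject, the farther the lens has to travel from its infinity position, which is why macro focusing needs the most actuator range.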

Shutter

Shutter speed, i.e. how long the sensor is exposed to light, is one of the factors that control how much light the sensor collects. It has traditionally been controlled by a mechanical component called the shutter.
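Shutter speed and aperture together set the exposure. A small sketch using the standard exposure-value formula EV = log2(N²/t), where N is the f-number and t the exposure time in seconds; each +1 EV halves the light reaching the sensor. The f/1.8 aperture is a hypothetical example, and the nanosecond input mirrors how Android's Camera2 API takes exposure time (`CaptureRequest.SENSOR_EXPOSURE_TIME`), but the helper functions are our own.

```python
import math

def exposure_value(f_number, exposure_time_s):
    """EV = log2(N^2 / t); a higher EV means less light reaches the sensor."""
    return math.log2(f_number ** 2 / exposure_time_s)

def ns_to_seconds(exposure_time_ns):
    # Camera2's SENSOR_EXPOSURE_TIME is expressed in nanoseconds
    return exposure_time_ns / 1e9

# With a fixed aperture (typical for phones), exposure time is the main way to change
# exposure; sensor gain (ISO) amplifies the signal instead of collecting more light.
aperture = 1.8  # hypothetical fixed f/1.8 lens
for t_ns in (10_000_000, 20_000_000):  # 1/100 s and 1/50 s
    print(t_ns, round(exposure_value(aperture, ns_to_seconds(t_ns)), 2))
```

Doubling the exposure time lowers the EV by exactly one stop, i.e. the sensor collects twice as much light.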

Given that smartphones are very tiny devices, it shouldn’t be any surprise that the last mechanical camera part before the sensor, the shutter, has been omitted from their designs. Instead, they use what’s called an electronic shutter (E-shutter) to expose your photos. Essentially, your smartphone tells the sensor to record the scene for a given time, reading it out row by row from top to bottom. While this is good for saving space and weight, there are tradeoffs. For example, if you shoot a fast-moving object, different rows of the sensor record it at different points in time (because readout is not instantaneous), skewing the object in your photo. This is known as the rolling shutter effect.
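The skew can be reproduced with a toy model of a rolling (row-by-row) electronic shutter. A sketch with made-up numbers: a one-pixel-wide vertical bar moves sideways, and each sensor row is sampled slightly later than the row above it. The function name and parameters are hypothetical.

```python
def rolling_shutter_capture(width, height, bar_x0, speed_px_per_s, line_readout_s):
    """Capture a 1-px-wide vertical bar moving right with a rolling shutter.
    Row r is sampled at time r * line_readout_s, so it sees the bar further along."""
    frame = []
    for r in range(height):
        t = r * line_readout_s                   # moment this row is read out
        x = round(bar_x0 + speed_px_per_s * t)   # bar position at that moment
        frame.append(["#" if c == x else "." for c in range(width)])
    return frame

# Hypothetical numbers: bar moves 2000 px/s, each row takes 1 ms to read out
img = rolling_shutter_capture(width=12, height=6, bar_x0=1,
                              speed_px_per_s=2000, line_readout_s=0.001)
print("\n".join("".join(row) for row in img))
```

The straight bar comes out as a diagonal. Setting `line_readout_s=0` models an ideal global shutter, where all rows are sampled at the same instant and the bar stays vertical.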

Sensor

Light travels through a lens, the shutter opens, and a moment is preserved by capturing it on the camera’s sensor.

The basic building blocks of an image sensor are photodiodes, which convert light into electrical charge.

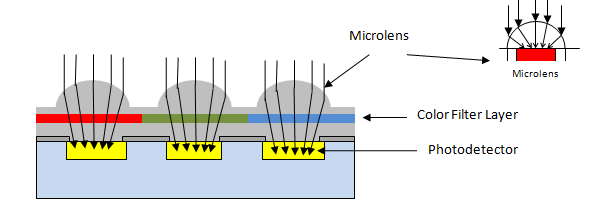

Figure: Basic image sensor.

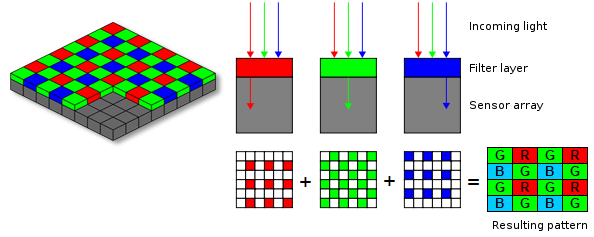

Because the camera’s sensor is photosensitive, a tiny electrical charge is produced when photons are collected in the photosites (one photosite per pixel). The photodiodes in an image sensor are color-blind by nature: they only measure light intensity, so they can only record shades of gray. To get color into the picture, as shown in the figure above, each photodiode is covered with a color filter. These filters are usually red, green and blue (RGB), arranged in the pattern defined by the Bayer filter. The color image sensor uses the Bayer filter to output the RAW Bayer image.
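To make "a tiny electrical charge" more concrete, here is a toy model of a single photosite, assuming a linear response: incoming photons become electrons with some quantum efficiency, the charge saturates at the photosite's full-well capacity, and an analog-to-digital converter quantizes it into a RAW value. The quantum efficiency, full-well capacity and 10-bit depth below are illustrative assumptions, not figures for any real sensor.

```python
def photosite_readout(photons, quantum_efficiency=0.6, full_well_e=6000, bit_depth=10):
    """Toy photosite: photons -> electrons -> digital number (DN).
    All default parameters are hypothetical."""
    electrons = min(photons * quantum_efficiency, full_well_e)  # saturates at full well
    max_dn = 2 ** bit_depth - 1
    return round(electrons / full_well_e * max_dn)              # linear ADC quantization

for photons in (0, 1000, 20000):
    print(photons, photosite_readout(photons))
```

Note that nothing in the function knows about color: it only counts light, which is exactly why a color filter has to sit on top of each photosite.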

The color filters are usually arranged so that half of the pixels are green (G) and a quarter each are red (R) and blue (B), because the human eye is more sensitive to green. When the image sensor is read line by line, one line comes out as GRGRGR, etc., and the next line as BGBGBG, etc., as shown in the diagram below.
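The GRGR / BGBG layout described above (often called GRBG) can be sketched in a few lines. Android reports a sensor's actual layout through `CameraCharacteristics.SENSOR_INFO_COLOR_FILTER_ARRANGEMENT` (values such as RGGB, GRBG, GBRG and BGGR); the sketch below simply assumes GRBG, and `bayer_color` and `mosaic` are our own illustrative helpers.

```python
def bayer_color(row, col):
    """Filter color at (row, col) for a GRBG layout:
    even rows read G R G R ..., odd rows read B G B G ..."""
    if row % 2 == 0:
        return "G" if col % 2 == 0 else "R"
    return "B" if col % 2 == 0 else "G"

def mosaic(rgb):
    """Keep only the channel each photosite's filter lets through.
    rgb is a list of rows of (r, g, b) tuples; the result is a one-channel RAW image."""
    index = {"R": 0, "G": 1, "B": 2}
    return [[pixel[index[bayer_color(r, c)]] for c, pixel in enumerate(row)]
            for r, row in enumerate(rgb)]

pattern = [[bayer_color(r, c) for c in range(4)] for r in range(4)]
for row in pattern:
    print("".join(row))
counts = {ch: sum(row.count(ch) for row in pattern) for ch in "RGB"}
print(counts)  # {'R': 4, 'G': 8, 'B': 4}
```

The counts confirm the 1:2:1 red/green/blue split.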

Figure: Bayer Transformation.

The raw output produced from Bayer-filter cameras is referred to as a Bayer image. This image is then further processed using the ISP pipeline to achieve quality pictures with all the necessary corrections applied.
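One of the first steps in that pipeline is demosaicing: reconstructing a full-color image from the Bayer mosaic. Real ISPs use much more sophisticated interpolation; the sketch below is only the simplest possible variant, a half-resolution "superpixel" demosaic. It assumes the GRBG layout described above and ignores every other correction (black level, white balance, noise reduction and so on).

```python
def superpixel_demosaic(raw):
    """Naive half-resolution demosaic for a GRBG RAW image: every 2x2 block
    (G R / B G) becomes one RGB pixel, averaging its two green samples."""
    h, w = len(raw), len(raw[0])
    if h % 2 or w % 2:
        raise ValueError("expects even width and height")
    out = []
    for r in range(0, h, 2):
        out_row = []
        for c in range(0, w, 2):
            g1, red = raw[r][c], raw[r][c + 1]
            blue, g2 = raw[r + 1][c], raw[r + 1][c + 1]
            out_row.append((red, (g1 + g2) / 2, blue))
        out.append(out_row)
    return out

raw = [[10, 200, 12, 210],
       [50, 14, 60, 16]]
print(superpixel_demosaic(raw))  # [[(200, 12.0, 50), (210, 14.0, 60)]]
```

This trades away half the resolution in each direction; proper demosaicing instead interpolates the two missing channels at every photosite.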

Popular types of sensors

- CCD (Charge-Coupled Device): In a CCD image sensor, when light strikes the chip, it is held as a small electrical charge in each photosensor. The charges in the line of pixels nearest to the output amplifiers are amplified and converted to an output, then each line of pixels shifts its charges one line closer to the amplifier.

- CMOS (Complementary Metal Oxide Semiconductor): A CMOS imaging chip is a type of image sensor that uses several transistors at each pixel to amplify its signal, instead of relying on a few shared amplifiers as a CCD does, and moves the signal using more traditional wires. In a conventional (front-illuminated) CMOS sensor, light passes through the red, green, and blue color filters and the metal wiring before reaching the photodiodes. Most modern sensors are of the CMOS variety.
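The two readout schemes in the list above can be contrasted with a deliberately simplified model that treats charge as plain numbers and ignores all analog effects; `GAIN` is a hypothetical conversion factor. Both functions return the same values. What differs is the path the charge takes: the CCD version shifts every row, one step at a time, through a single shared amplifier, while the CMOS version reads each pixel through its own amplifier.

```python
GAIN = 2  # hypothetical charge-to-signal conversion factor

def ccd_readout(charges):
    """CCD-style: only the row next to the output amplifier is read. After each
    read, every remaining row shifts its charge one row closer to the amplifier."""
    rows = [list(row) for row in charges]  # copy, since readout drains the sensor
    out = []
    for _ in range(len(rows)):
        out.append([q * GAIN for q in rows[-1]])   # amplify the row nearest the amplifier
        rows = [[0] * len(rows[0])] + rows[:-1]    # shift all charges one row down
    return out[::-1]                               # restore top-to-bottom order

def cmos_readout(charges):
    """CMOS (active-pixel) style: each pixel has its own amplifier transistors,
    so rows are addressed and read directly without shifting charge."""
    return [[q * GAIN for q in row] for row in charges]

frame = [[1, 2], [3, 4], [5, 6]]
print(ccd_readout(frame) == cmos_readout(frame))  # True: same data, different readout path
```

The serial shifting is what makes CCD readout slower and more power-hungry, which ties into why phones favor CMOS.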

Nearly all camera phones use CMOS active-pixel image sensors (CMOS sensors) because of their much lower power consumption compared to charge-coupled device (CCD) sensors, which are used in only a few camera phones. Some camera phones even use more expensive back-illuminated CMOS sensors, which use less energy than conventional CMOS sensors but are more expensive than both conventional CMOS and CCD sensors.

Flash

A flash is a photographic device that produces a brief burst of artificial light (typically lasting 1/1000 to 1/200 of a second) at a color temperature of about 5500 K to help illuminate a scene. A major purpose of a flash is to illuminate a dark scene.

Figure: Image exposed without additional lighting (left) and with fill flash (right).

The flash is synchronized with the photo capture process: typically it fires once during the 3A process (a pre-flash) and again at capture time to get optimal exposure. During video capture, the flash is usually used as a continuous torch. These days, with advances in Night Mode algorithms based on long exposures, noise reduction, multi-frame merging and ML, the flash is becoming less popular.
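One reason multi-frame merging reduces the need for flash: averaging N aligned frames of a static scene reduces random noise by roughly a factor of sqrt(N). A minimal sketch, assuming perfectly aligned frames, a flat grey scene and independent Gaussian noise (a hypothetical noise model); real Night Mode pipelines also align frames, reject moving content and apply the other techniques mentioned above.

```python
import random
import statistics

def capture_frame(true_value, noise_sigma, n_pixels, rng):
    """Simulate one noisy frame of a flat grey scene (hypothetical Gaussian noise)."""
    return [true_value + rng.gauss(0, noise_sigma) for _ in range(n_pixels)]

def merge_frames(frames):
    """Average aligned frames pixel by pixel (assumes no motion between frames)."""
    return [sum(px) / len(px) for px in zip(*frames)]

rng = random.Random(42)
single = capture_frame(100.0, 10.0, 10_000, rng)
merged = merge_frames([capture_frame(100.0, 10.0, 10_000, rng) for _ in range(16)])
print(round(statistics.stdev(single), 1), round(statistics.stdev(merged), 1))
```

With 16 frames the noise should drop to roughly a quarter of the single-frame level, which is the kind of gain that lets a phone brighten a dark scene without firing the flash.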

More

I'll be writing more about the remaining hardware in my next article on CodeMentor soon; for now, you can read about it on my blog: