The Need, Promise, and Reality of Quantum Computing

The digital revolution has given us the most spectacular wave of technological innovation in human history, yet there are certain computational problems it still can’t seem to solve. Some of these problems could be holding back key scientific breakthroughs, and even the global economy. Although conventional computers have been doubling in power and processing speed nearly every two years for decades, they still don’t seem to be getting any closer to solving these persistent problems. Want to know why? Ask any computer scientist, and they’ll probably give you the same answer: today’s digital, conventional computers are built on a classical, and very limited, model of computing. In the long run, to efficiently solve the world’s most persistent computing problems, we’re going to have to turn to an entirely new and more capable animal: the quantum computer.

Ultimately, the difference between a classical computer and a quantum computer is not like the difference between an old car and a new one. Rather, it’s like the difference between a horse and a hawk: while one can run, the other can fly. Classical computers and quantum computers are indeed that different. Here we take a good look at where the key difference lies, and take a deep dive into what makes quantum computers unique. However, what you won’t find here is a final explanation for how quantum computers ultimately work their magic. Because no one really knows.

The hard limits of classical computing

Moore’s law, Shmore’s Law

For several decades now, the number of transistors on a chip, and with it the sheer speed and computational power of conventional computers, has been doubling roughly every two years (and by some accounts just eighteen months). This is known as Moore’s law. Although the breakneck pace of progress may have finally begun to slow slightly, it’s still more or less true that the room-filling supercomputer of today is the budget laptop of tomorrow. So at this rate, it seems reasonable to assume that there is no computational task that a conventional computer couldn’t eventually tackle in the foreseeable future. Nonetheless, unless we’re talking trillions of years (and then some), that’s simply not a safe assumption when it comes to certain stubborn tasks.

The conventional computer’s Achilles heel

The fact is that a computational task such as quickly finding the prime factors of very large integers is probably out of reach for even the fastest conventional computers of the future. The reason is that, for the best classical methods we know of, the time needed to find the prime factors of a number grows explosively with the number of digits. The simplest way to picture that kind of blow-up is exponential growth. What’s exponential growth? Well, let’s dive into it, because this is a very important piece for understanding why quantum computers have so much potential and why classical computers fall short.

Quick introduction to exponential growth

Some things grow at a consistent rate, and some things grow faster as the number of “things” you have also grows. When the rate of growth is proportional to the current total (for example, when the total doubles at every step), the growth is exponential.

Exponential growth is extremely powerful. One of the most important features of exponential growth is that, while it starts off slowly, it can result in enormous quantities fairly quickly — often in a way that is shocking.

This definition can be a bit hard to get your head around without an example, so let’s dive into a quick story.

There is a legend in which a wise man, who was promised a reward by a king, asks the ruler to reward him by placing one grain of rice on the first square of a chessboard, two grains on the second square, four grains on the third and so forth. Every square was to have double the number of grains as the previous square. The king granted his request but soon realized that the rice required to fill the chessboard was more than existed in the entire kingdom and would cost him all of his assets.

Exponential Growth of Rice:

The number of grains on any square reflects the following rule, or formula:

N = 2^(k − 1)

In this formula, k is the number of the square and N is the number of grains of rice on that square.

- If k = 1 (the first square), then N = 2⁰, which equals 1.

- If k = 5 (the fifth square), then N = 2⁴, which equals 16.

This is exponential growth because the exponent, or power, increases as we go from square to square.
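To make the chessboard story concrete, here is a minimal Python sketch of the rule N = 2^(k − 1). The function names `grains_on_square` and `total_grains` are made up for this illustration; they are not from any library.

```python
def grains_on_square(k: int) -> int:
    """Grains on square k (squares numbered from 1): N = 2^(k - 1)."""
    if k < 1:
        raise ValueError("squares are numbered from 1")
    return 2 ** (k - 1)


def total_grains(squares: int = 64) -> int:
    """Total grains on the first `squares` squares of the board."""
    return sum(grains_on_square(k) for k in range(1, squares + 1))


if __name__ == "__main__":
    # A few squares, to show the slow start and the sudden blow-up.
    for k in (1, 5, 10, 32, 64):
        print(f"square {k:>2}: {grains_on_square(k):,} grains")
    print(f"whole board: {total_grains():,} grains")
```

The total for the whole board works out to 2^64 − 1 grains, which is why the king was in trouble.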

To conceptualize this further, I’ve included a graph of what exponential growth looks like in relation to the input quantity of an exponential function.

As you can see, the function starts relatively slow, but soon shoots up to values so large that, for big enough inputs, no classical computer could work through them.

Real exponential functions have real consequences

Okay, enough storytelling. Let’s move on to real-world exponential problems like the one we were talking about earlier: prime factorization.

Take the number 51. See how long it takes you to find the two unique prime numbers that you can multiply together to generate it. If you’re familiar with these kinds of problems, it probably only took you a few seconds to find that 3 and 17, both primes, generate 51. As it turns out, this seemingly simple process lies at the heart of the digital economy and is the basis for some of our most widely used types of encryption. We use this technique in encryption because as the numbers involved get larger and larger, it becomes increasingly difficult for conventional computers to factor them. Once you reach a certain number of digits, you find that it would take even the fastest conventional computer months, years, centuries, millennia, or even countless eons to factor it.
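Here is a rough sketch of the simplest way a conventional computer can attack this problem: trial division. This is only an illustration (the function name `prime_factors` is my own, and serious factoring programs use far more sophisticated methods), but it shows where the pain comes from. The loop may have to try divisors all the way up to the square root of the number, and that square root gets ten times bigger for every two digits you add.

```python
def prime_factors(n: int) -> list[int]:
    """Return the prime factors of n in ascending order, using trial division."""
    if n < 2:
        raise ValueError("n must be at least 2")
    factors = []
    d = 2
    # Only divisors up to sqrt(n) need checking; anything left over is prime.
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        factors.append(n)
    return factors


if __name__ == "__main__":
    print(prime_factors(51))  # [3, 17]
```

For 51 this finishes instantly. For a number hundreds of digits long, the same loop would still be running long after the sun burned out.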

With this idea in mind, even if computers continue to double in processing power every two years for the foreseeable future (and don’t bet on it), they will always struggle with prime factorization. Other equally stubborn problems at the heart of modern science and mathematics include certain molecular modeling and mathematical optimization problems which promise to crash any supercomputer that dares to come anywhere near them.
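To see why doubling hardware speed doesn’t rescue us, consider a deliberately simplified toy model. Assume a problem of size b bits costs 2^b steps to solve, and assume computers double in speed every two years. Both are assumptions made for illustration (real factoring algorithms are cleverer than this, and the function name and numbers below are hypothetical). Under that model, each doubling of speed buys exactly one extra bit of problem size in the same running time.

```python
def years_until_feasible(target_bits: int,
                         feasible_bits_today: int,
                         doubling_years: float = 2.0) -> float:
    """Toy model: a b-bit problem costs 2**b steps, and hardware speed
    doubles every `doubling_years`. Each doubling makes one more bit
    feasible, so the wait grows linearly with the number of extra bits."""
    extra_bits = max(0, target_bits - feasible_bits_today)
    return extra_bits * doubling_years


if __name__ == "__main__":
    # Hypothetical starting point: 64-bit problems are feasible today.
    for target in (74, 128, 2048):
        years = years_until_feasible(target, 64)
        print(f"{target}-bit problem: about {years:,.0f} years of hardware progress")
```

In this toy model, twenty years of progress buys just ten more bits. That is the sense in which exponential problems shrug off exponential hardware improvements.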

Below is a great illustration from IBM Research that shows the most complex molecule (F cluster) that we can simulate on the world’s most powerful supercomputer. As you can see (in the bottom left of the image), the molecule is not very complex at all, and if we want to model more complex molecules to discover better drug treatments and understand our biology, then we will need a different approach!

Molecular Simulation Problem. Source: IBM Research

Enter the quantum computer

Conventional computers are strictly digital, and rely purely on classical computing principles and properties. Quantum computers, on the other hand, are strictly quantum. Accordingly, they rely on quantum principles and properties — most importantly superposition and entanglement — that make all the difference in their almost miraculous capacity to solve seemingly insurmountable problems.

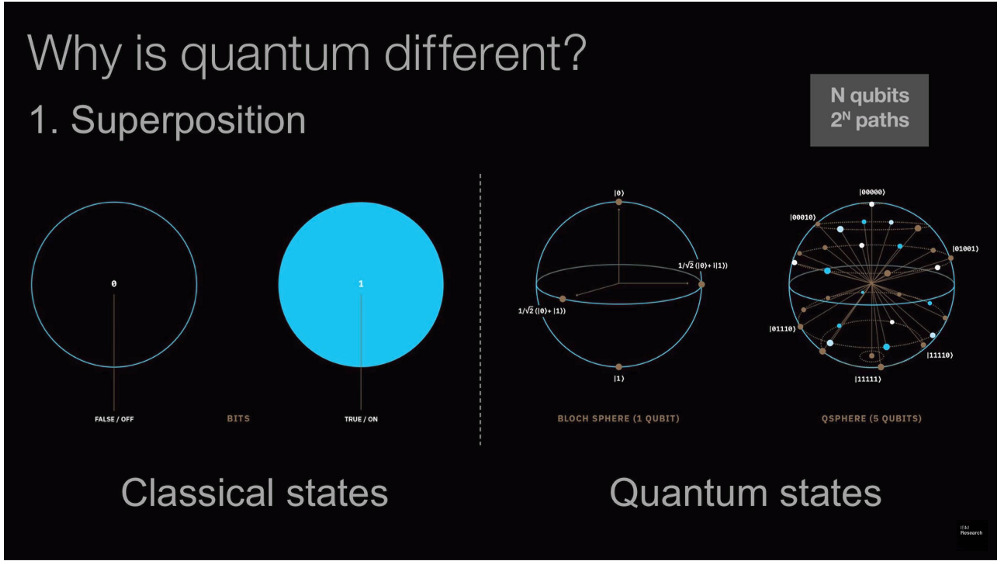

Superposition

To make sense out of the notion of superposition, let’s consider the simplest possible system: a two-state system. An ordinary, classical two-state system is like an On/Off switch that is always in one state (On) or another (Off). Yet a two-state quantum system is something else entirely. Of course, whenever you measure its state, you will find that it is indeed either on or off, just like a classical system. But between measurements, a quantum system can be in a superposition of both on and off states at the same time, no matter how counter-intuitive, and even supernatural, this may seem to us.

Superposition. Source: IBM Research

Generally speaking, physicists maintain that it’s meaningless to talk about a quantum system’s state, such as its spin, prior to measurement. Some even argue that the very act of measuring a quantum system causes it to collapse from a murky state of uncertainty to the value (On or Off, Up or Down) that you measure. Although probably impossible to visualize, there’s no escaping the fact that this mysterious phenomenon is not only real, but gives rise to a new dimension of problem-solving power that paves the way for the quantum computer. Keep the idea of superposition in mind. We will come back to how this is used in quantum computing in a bit.
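If it helps, here is a small Python sketch of how measurement of a two-state quantum system is usually modeled on paper. It assumes the standard textbook rule that a state is described by two numbers (amplitudes) and that the chance of measuring each outcome is the squared magnitude of its amplitude. The names `measure` and `sample_counts` are made up for this example, and a classical program like this only mimics the statistics of measurement; it is not a quantum system.

```python
import math
import random


def measure(amp0: complex, amp1: complex, rng: random.Random) -> int:
    """Simulate one measurement: returns 0 (Off) or 1 (On).
    The probability of each outcome is the squared magnitude of its amplitude."""
    p0 = abs(amp0) ** 2
    p1 = abs(amp1) ** 2
    if not math.isclose(p0 + p1, 1.0, rel_tol=1e-9):
        raise ValueError("amplitudes must be normalized")
    return 0 if rng.random() < p0 else 1


def sample_counts(amp0: complex, amp1: complex,
                  shots: int = 10_000, seed: int = 0) -> dict[int, int]:
    """Prepare the same state `shots` times and measure it each time."""
    rng = random.Random(seed)
    counts = {0: 0, 1: 0}
    for _ in range(shots):
        counts[measure(amp0, amp1, rng)] += 1
    return counts


if __name__ == "__main__":
    equal = 1 / math.sqrt(2)  # equal superposition of Off and On
    print(sample_counts(equal, equal))
```

Each individual measurement gives a definite 0 or 1, but over many runs an equal superposition comes out roughly half and half.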

How superposition is even possible is beyond the scope of this article, but trust that it has been confirmed by experiment. If you want to understand what gives rise to superposition, then you are first going to need to understand the idea of wave/particle duality.

Entanglement

Okay, on to the next property of quantum mechanics which we need to leverage to create a quantum computer.

It is known that once two quantum systems interact with one another, they become hopelessly entangled partners. From then on, the state of one system will give you precise information about the state of the other system, no matter how far the two are from one another. Seriously, the two systems can be light years apart and still give you precise and instantaneous information about each other. Let’s illustrate this with a concrete example, since it caused even Einstein to puzzle over how this could be possible. (Einstein famously referred to this phenomenon as “spooky action at a distance.”)

Quantum Entanglement. Source: IBM Research

Suppose you have two electrons, A and B. Once you have them interact in just the right way, their spins will automatically get entangled. From then on, if A’s spin is Up, B’s spin will be Down, like two kids on a seesaw, except that this holds true even if you take A and B to opposite ends of the Earth (or the galaxy, for that matter). Despite the thousands of miles (or light years) between them, it’s been proven that if you measure A to have spin Up, you will know instantly that B’s spin is Down. But wait: we’ve already learned that these systems don’t have precise values for states such as spin, but rather exist in a murky superposition, prior to measurement. So does our measuring A actually cause B to instantaneously collapse to the opposite value, even when the two are light years apart? If so, then we have yet another problem on our hands, because Einstein taught us that no causal influence, such as a light signal, between two systems can travel faster than the speed of light. So what gives? All told, we honestly don’t know. All we know is that quantum entanglement is real, and that you can leverage it to work wonders.
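The seesaw behavior of A and B can be sketched in a few lines of Python. This assumes the usual textbook description of such a pair: the combined state has equal-sized amplitudes for “A Up, B Down” and “A Down, B Up”, and zero for everything else. The encoding (0 for Up, 1 for Down) and the names `SINGLET` and `measure_pair` are my own choices for the illustration. One big caveat: this only reproduces what you see when both spins are measured along the same direction, which a pair of pre-arranged classical coins could also fake. The genuinely strange quantum behavior shows up when the two measurements use different directions, and this sketch does not capture that.

```python
import math
import random

# Joint state of electrons A and B, keyed by "AB" outcome (0 = Up, 1 = Down).
# Only the anti-aligned outcomes have nonzero amplitude.
SINGLET = {"01": 1 / math.sqrt(2), "10": -1 / math.sqrt(2)}


def measure_pair(state: dict[str, float], rng: random.Random) -> tuple[int, int]:
    """Measure both spins along the same axis; returns (A, B)."""
    outcomes = list(state)
    # Probability of each joint outcome is the squared magnitude of its amplitude.
    weights = [abs(state[o]) ** 2 for o in outcomes]
    joint = rng.choices(outcomes, weights=weights, k=1)[0]
    return int(joint[0]), int(joint[1])


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(5):
        a, b = measure_pair(SINGLET, rng)
        print(f"A = {'Up' if a == 0 else 'Down'}, B = {'Up' if b == 0 else 'Down'}")
```

Run it and A looks like a fair coin flip every time, yet B is always the opposite of whatever A turned out to be.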

The qubit

The qubit plays the same role in quantum computing as the bit does in classical computing: it’s the fundamental unit of information. However, compared to a qubit, a bit is downright boring. Although both bits and qubits generate one of two states (a 0 or a 1) as the outcome of a computation, a qubit can simultaneously be in both 0 and 1 states prior to that outcome. If this sounds like quantum superposition, it is. Qubits are quantum systems par excellence.

Just as conventional computers are built bit by bit with transistors that are either On or Off, quantum computers are built qubit by qubit with two-state quantum systems; in the picture used here, electrons in spin states that are either Up or Down (once measured, of course). And just as transistors in On/Off states are strung together to form the logic gates that perform classical computations in digital computers, electrons in Up/Down spin states are strung together to form the quantum gates that perform quantum calculations in quantum computers. Yet stringing together individual electrons (while preserving their spin states) is far, far easier said than done.
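On paper, a quantum gate acting on a single qubit is just a 2×2 matrix applied to the qubit’s two amplitudes. Here is a minimal sketch, assuming the standard textbook definitions of two well-known gates: X (the quantum version of a NOT gate) and H (the Hadamard gate, which turns a definite 0 into an equal superposition). The helper name `apply_gate` and the tuple representation are my own choices, not any particular library’s API.

```python
import math

State = tuple[complex, complex]  # (amplitude of 0, amplitude of 1)
Gate = tuple[tuple[complex, complex], tuple[complex, complex]]

_h = 1 / math.sqrt(2)
X: Gate = ((0, 1), (1, 0))       # swaps the amplitudes of 0 and 1
H: Gate = ((_h, _h), (_h, -_h))  # creates or undoes an equal superposition


def apply_gate(gate: Gate, state: State) -> State:
    """Multiply the 2x2 gate matrix by the state's amplitude vector."""
    (a, b), (c, d) = gate
    s0, s1 = state
    return (a * s0 + b * s1, c * s0 + d * s1)


if __name__ == "__main__":
    zero: State = (1, 0)            # a qubit that is definitely 0
    flipped = apply_gate(X, zero)   # definitely 1
    superposed = apply_gate(H, zero)
    print("after X:", flipped)
    print("after H:", superposed)
    print("after H twice:", apply_gate(H, superposed))
```

Applying H twice brings the qubit back to a definite 0 (up to floating-point rounding), something no coin flip can do. That reversibility is one hint that superposition is more than plain randomness.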

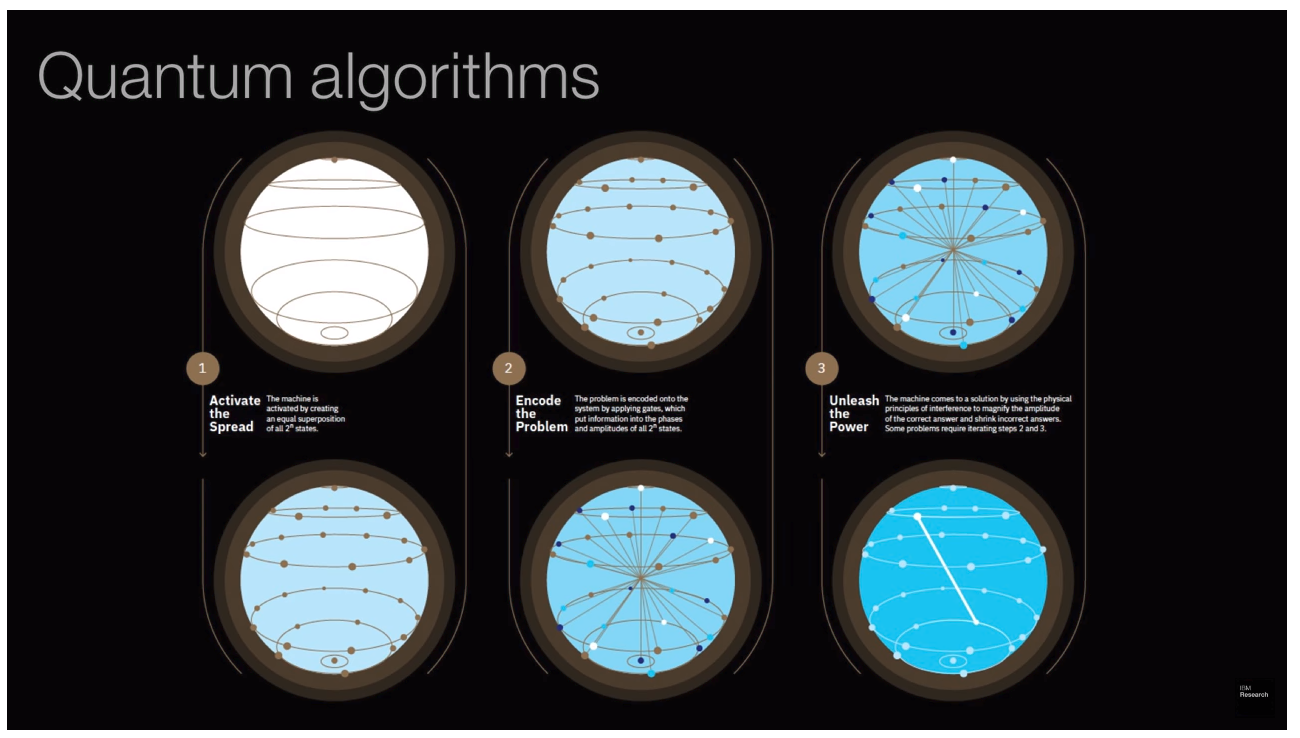

Quantum Algorithms. Source: IBM Research

Where are we today?

While Intel is busy pumping out conventional chips with billions of transistors apiece, the world’s leading experimental computer scientists are still struggling to build a quantum computer “chip” with more than a handful of qubits. Just to give you a sense of how early we are in the history of quantum computing, it was a big deal when IBM recently unveiled the largest quantum computer in the world with an astonishing… wait for it… 50 qubits. Nonetheless, it’s a start, and if anything like Moore’s law applies to quantum computers, we should get into the hundreds in a few years, and the thousands in a few more. A billion? I wouldn’t hold my breath, but then again, you don’t need a billion qubits to beat the daylights out of a conventional computer in some key categories, such as prime factorization, molecular modeling and a slew of optimization problems that no conventional computer can touch today.

The quantum computers of 2018

All the same, as of right now, nearly every quantum computer is a multi-million dollar borderline mad-scientist project that looks the part. You generally find them in R&D departments at large IT companies like IBM, or in the experimental physics wing of large research universities, like MIT. They have to be super-cooled to a hair above absolute zero (that’s colder than intergalactic space), and experimenters need to use microwaves of a precise frequency to communicate with each qubit in the computer individually. Needless to say, that doesn’t scale. But neither did the vacuum tubes of the earliest conventional computers, so let’s not judge this first generation too harshly.

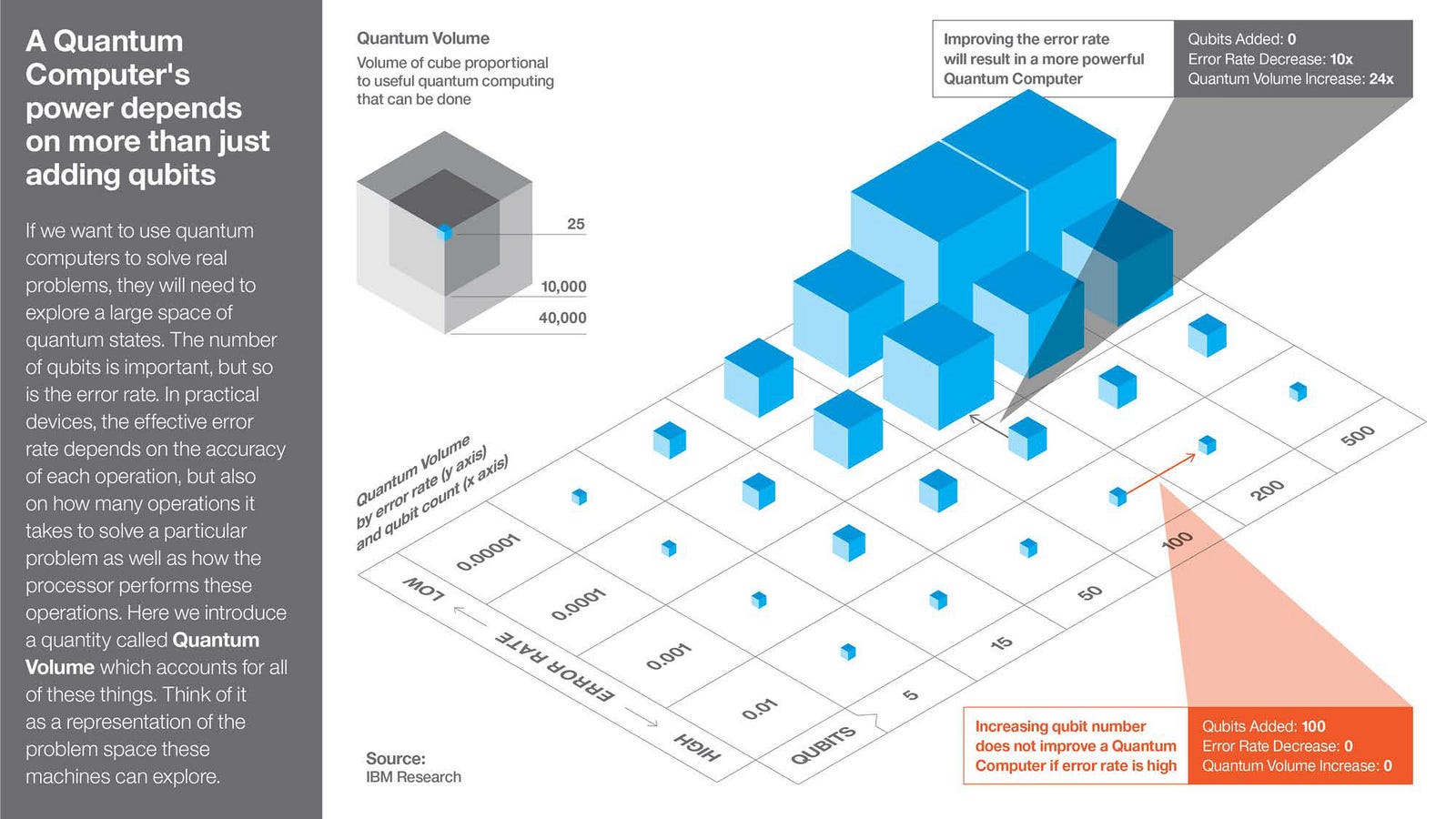

Roadblocks awaiting breakthroughs

The primary reason that quantum computers haven’t gone mainstream yet is that the best minds and inventors in the world are still struggling with high error rates and low numbers of qubits. As we address these two problems together, we will rapidly increase what IBM calls each computer’s “quantum volume,” a metric meant to capture how much useful computation a quantum computer can perform, taking both qubit count and error rates into account.

Quantum Volume. Source: IBM Research

In short, for quantum computing to take off and quantum-powered MacBooks to start flying off the shelves, we need far more qubits and far fewer mistakes. That’s going to take time, but at least we know what we’re aiming for, and what we’re up against.

Myths vs explanations

Although we know that quantum computers can easily do things that no conventional computer can dream of doing, we don’t really know how they do it. If this sounds surprising, given that the first generation of quantum computers already exists, keep in mind the word quantum. We’ve been using quantum mechanics to solve problems for a century now, and we still don’t really know how it works. Quantum computing, as a member of the quantum family, is in the same boat. Michael Nielsen (who basically wrote the book on the subject) has argued convincingly that any explanation of quantum computing is destined to miss the mark. After all, according to Nielsen, if there were a straightforward explanation for how a quantum computer works (that is, something you could visualize), then it could be simulated on a conventional computer. But if it could be simulated on a conventional computer, then it couldn’t be an accurate model of a quantum computer, because a quantum computer by definition does what no conventional computer can do.

According to Nielsen, the most popular myth that pretends to explain quantum computation is called quantum parallelism. Because you’re going to hear the quantum parallelism story a lot, let’s look at it for a moment. The basic idea behind quantum parallelism is that quantum computers, unlike their conventional counterparts, explore the full spectrum of possible computational outcomes/solutions simultaneously (i.e. in a single operation), while digital computers must plod along, looking at each solution in sequence. According to Nielsen, this part of the quantum-parallelism story is roughly right. However, he sharply criticizes the rest of the story, which goes on to say that after surveying the full spectrum of solutions, quantum computers pick out the best one. Now that, according to Nielsen, is a myth. The truth, he insists, is that what quantum computers, like all quantum systems, are really doing behind the scenes is entirely out of our reach. We see the input, and the output, and what happens in between is sealed in mystery.

If you liked this article, I would be super excited if you hit the clap button or shared it with your curious friends. I’ve got much more like it over at my personal blog (jasonroell.com), or you can just subscribe to my Medium profile and get all my articles sent to you as soon as I write them! (how awesome?!)


Anyway, thanks again for reading, and have a great day!