Computer Vision and Why It is so Difficult

The most difficult things to understand are the ones that are fundamental. We live in a visual world. We see things and instantly understand what they are and what we can do with them. We can not only identify them but also understand their particular attributes and the classification they belong in. Without thinking too deeply about it we can look at a book and understand the process of the idea, the thinking, the writing, the writer, the agent, the editor, the publishing house, the printer, the marketer, the salesman, the bookshop, the economy. And this is a far from exhaustive list of association that revolve around a book.

Even more to the point we can each look at a book and understand what it is and what it does even if we have not all got the same nuanced understanding of what goes into its making and what happens around it. Our depth of knowledge (or lack of it) will only play a role in specific contexts (like a book convention or a forum on the economy) but for most everyday purposes a number of us that is sufficiently large to be called the majority, will be able to ascribe most of the key attributes that make the book into an entity.

A book may be a great read if it is well written but in the cases where this is not the case it also makes a great doorstop.

So vision, really, is a knowledge thing as opposed to an eye thing and this is where things get very interesting. Knowledge is based on what is accepted of the real world and that includes both factual and imaginary things. We can all agree, for instance, on who Harry Potter is what he did and why he did it while we all also agree that he is an imaginary being. This means that in order to understand what we see we do not just use deductive reasoning whereby what we reach as a conclusion is 100% true, we also use inductive reasoning where we extrapolate from premises that are probably true and reach conclusions that are likely: “A book may be a great read if it is well written but in the cases where this is not the case it also makes a great doorstop.”

In that last sentence we imagine not only instances of success and failure but also a world where wit and sarcasm play a part in describing quality. Computers can be equipped with hardware that captures data (as in light in this case) in a far broader spectrum than our organic eyes, and they can also be equipped with algorithms that do such a marvelous job of interpreting this data that just by studying patterns of light they can learn to see around corners. Computers can also be equipped to perform deductive reasoning.

In a paper presented at the 10th International Conference on Artificial General Intelligence, AGI 2017, held in Melbourne, Australia, Army Laboratory researcher Douglas Summers Stay presented his paper on “deductive reasoning on a semantically embedded knowledge graph” with an abstract that read: “Representing knowledge as high-dimensional vectors in a continuous semantic vector space can help overcome the brittleness and incompleteness of traditional knowledge bases. We present a method for performing deductive reasoning directly in such a vector space, combining analogy, association, and deduction in a straightforward way at each step in a chain of reasoning, drawing on knowledge from diverse sources and ontologies.” — reasoning using a combination of “analogy, association, and deduction” and “drawing on knowledge from diverse sources and ontologies.” is exactly what semantic search is designed to do and notice how Stay refers to “a continuous semantic vector space” which mirrors the way the brain achieves infinite storage capacity in a finite space.

Image from US7519200B2 awarded to Google Inc shows the variables that must be recognized in order to assign a high degree of accuracy to the reading of a face by a computer.

So, now it sounds like we have the problem virtually solved. Yes and no. Yes in that search has become very good at understanding objects in images (and sometimes, video) and building an index which when coupled to its knowledge base for context can create a pretty good sense of search being intelligent. Two Google patents in particular point out just how this is done in a way that make it appear seamless.

Both of these highlight the issue that is the major stumbling block: namely context and, by association, inductive reasoning. Because knowledge constantly evolves and morphs into analogies that contain meaning but make no sense (like my example above of using a badly written book as a doorstop) computer logic stumbles on inductive reasoning when it is unsupervised and this, also affects, computer vision, at least, where specific contexts are involved. So, it may be OK to have a self-driving vehicle where the on-board telemetry provides a system of vision that is superior to anything a human could bring to bear (think of a car that could ‘see’ through fog, know what’s coming around corners and can even tap into traffic sensors as well as traffic reports to optimize the journey) but a robot nanny is more problematic (because children are inherently unpredictable and in their unpredictability lie risks to their safety).

Inductive Reasoning and Semantic Search

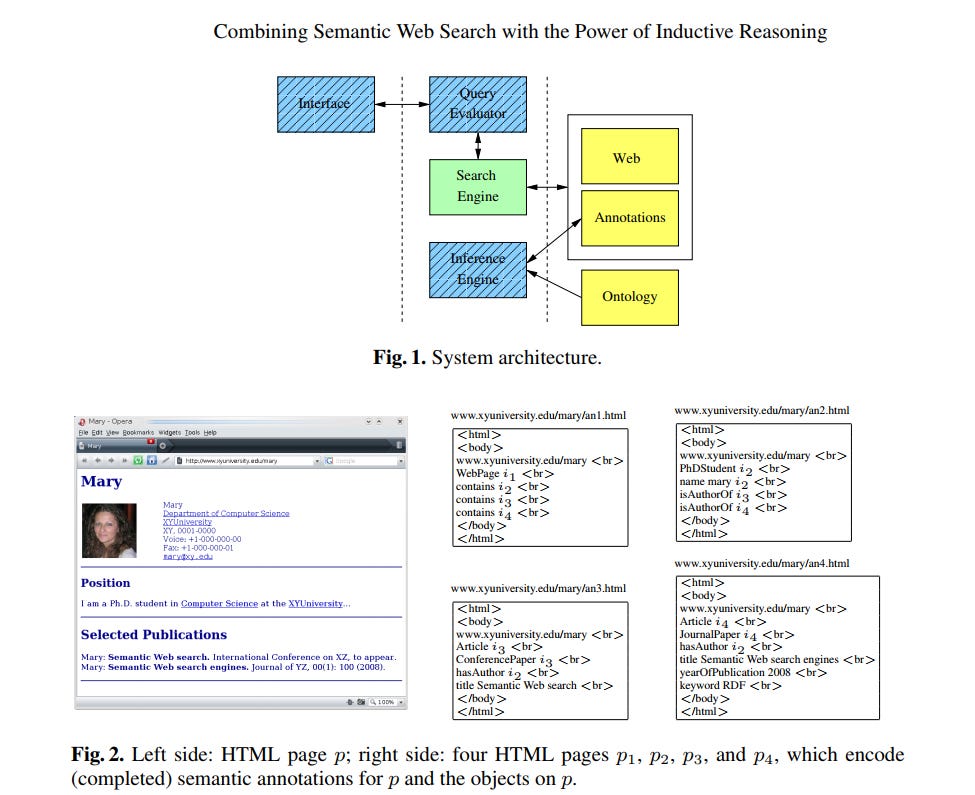

One technique that shows some promise comes out of Oxford University’s Computing Laboratory and innovatively employs inductive computer reasoning based upon probable outcomes and correlations and semantic search techniques to deliver greater accuracy in information retrieval in high-uncertainty contexts.

Everything is made of data and data is subject to the four vectors of Volume, Velocity, Variety and Veracity that I covered in detail in my book on Google Semantic Search. Each of these four vectors presents its own challenges that are further exacerbated by the ever increasing volume of data we place online.

In computer vision (and object recognition in search) we demand a higher standard than we would with a human. Mistakes made by machines undermine our trust in them because, unlike with humans we cannot usually see how they failed. As a result their failure becomes generalized and we perceive a machine (whether it is a search algorithm or a robot) to be fundamentally flawed. With humans we usually understand failure because we can model their performance on our own parameters of knowledge, memory and skill. Failures then become acceptable because we fully understand the limits of human capability.

The development of artificial intelligence in both the specific and the general sense is closely tied to cracking the computer vision challenge. Vision, after all, truly is mostly mental.

My latest book: The Sniper Mind: Eliminate Fear, Deal with Uncertainty, and Make Better Decisions is a neuroscientific study into how to apply practical steps for better decision making.