An Introduction to Docker by Instructor of O’Reilly’s Docker Tutorial

This article introduces what Docker is, how it works, and some basic terminology such as containers, images, and Dockerfiles. It is based on the Codementor Office Hours hosted by the author of the O’Reilly Docker video course, Andrew T. Baker.

What is Docker?

Docker started off as a side project at dotCloud, a Platform-as-a-Service company often compared to Heroku. I think it’s really cool how their business took off after making Docker their main focus.

Some people think Docker is a virtualization tool like VMware or VirtualBox. Some people think it’s a VM manager that helps you manage virtual machines, like Vagrant. Some people think it’s a configuration management tool, much like Chef, Ansible, or Puppet. There are other words that float around the Docker ecosystem, such as cgroups, LXC, libvirt, Go, and so on.

However, when I’m trying to explain Docker to people who are new to it, I try to tell them it’s in its own category, so it’s not comparable to any existing tool.

If you go to Docker.com, you’ll see in its description:

Docker is an open platform for developers and sysadmins to build, ship, and run distributed applications.

It’s sort of difficult to tell what Docker is from that sentence alone, but I’d boil it down to saying that Docker is simply a new and different way to run & deploy software applications.

There are two big pieces to Docker. The Docker Engine is the Docker binary that runs on your local machine and servers and does the work of running your software. The Docker Hub is a website and cloud service that makes it easy for everyone to share their Docker images.

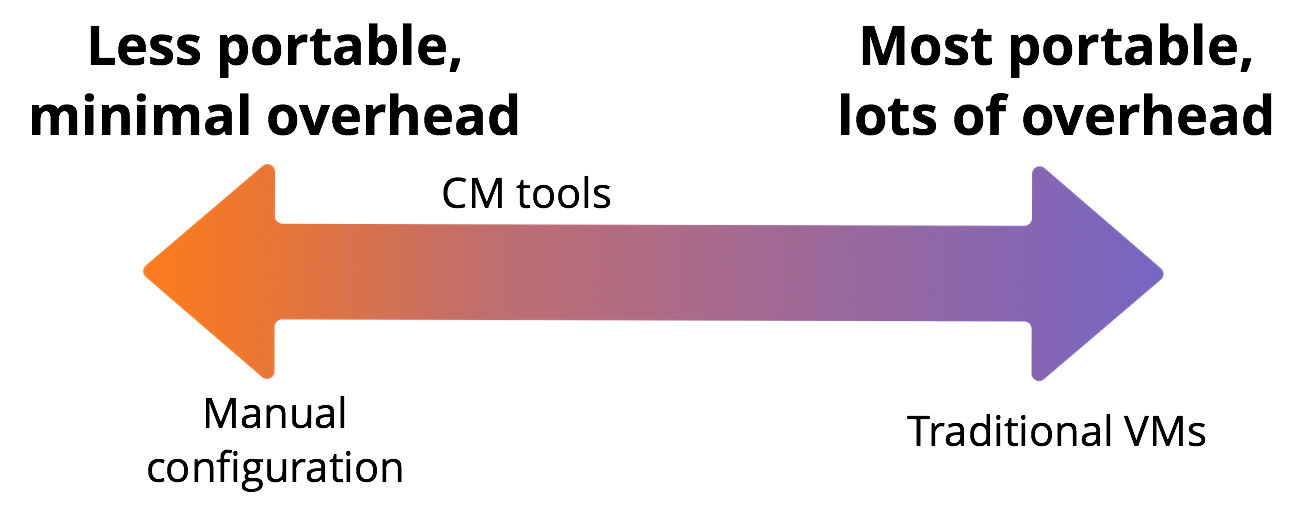

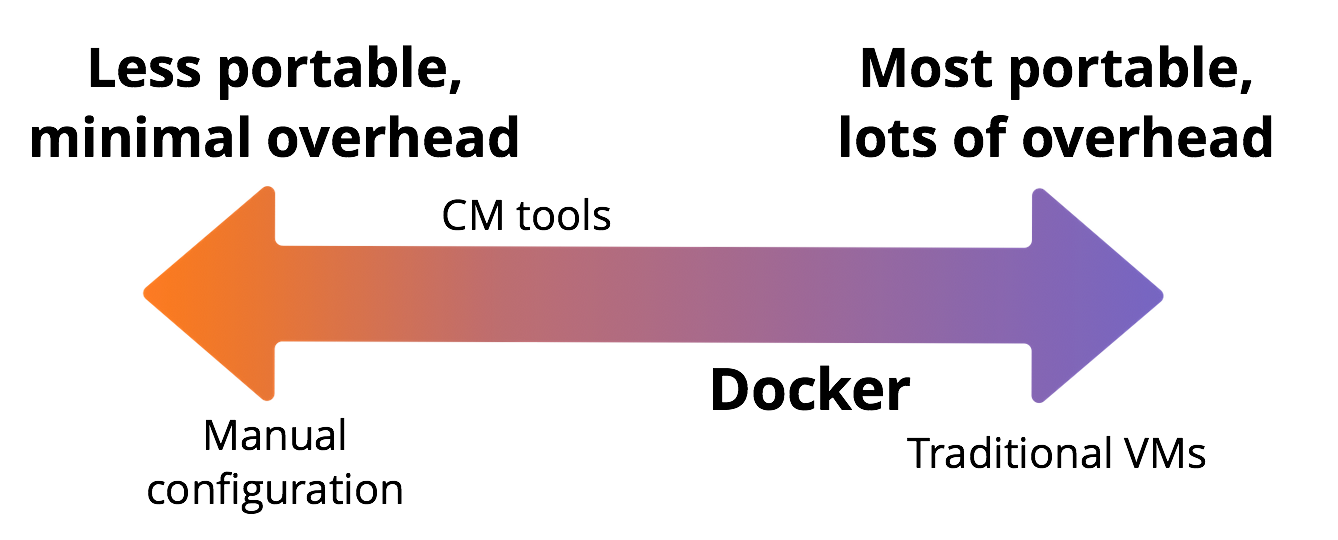

Software Deployment Tools

This is a spectrum of what it takes to deploy your software to a server. The least portable method, but the one with the least overhead, is manual configuration. An example would be to start up a new EC2 instance on Amazon Web Services, Secure Shell (SSH) into it, and run all the individual commands you need to install the right packages for your software application. With manual configuration you can keep a script of the commands you run, and when you’re done the server will be configured exactly the way you want. However, it’s very difficult to take that configuration to a new server, and you’ll have to do everything all over again if you switch servers.

Next on the spectrum are Configuration Management (CM) tools such as Puppet, Chef, or Ansible. For the last few years, this has been the preferred way to configure your server. You’d have your software application, and then you’d also write some additional code for configuration using one of the CM tools. Writing the configuration code makes your software app a bit more portable, because the next time you start up a new server, you won’t need to manually SSH into it or remember all the commands you ran. Instead, you can just tell the CM tool to go ahead and configure the application exactly the way you’ve specified. It still takes time for all those commands to run on a new server, but at least you don’t have to do it manually.

Jumping all the way to the right-hand side of the spectrum is where traditional Virtual Machines (VMs) come in. It’s the most portable way to deploy software, but it also has a lot of overhead. Many organizations running on Amazon Web Services (AWS) will use Amazon Machine Images, for example, to deploy their servers to the cloud, and this is a great way to ensure the software you configure in your build pipeline or on your development machine will behave exactly the way you expect in production. However, these Amazon Machine Images are full exports of virtual appliances, which means they’re really big and take a long time to build and move around.

I like to think Docker occupies a sweet spot on this spectrum, where you get most of the isolation of Virtual Machines at a fraction of the computing cost.

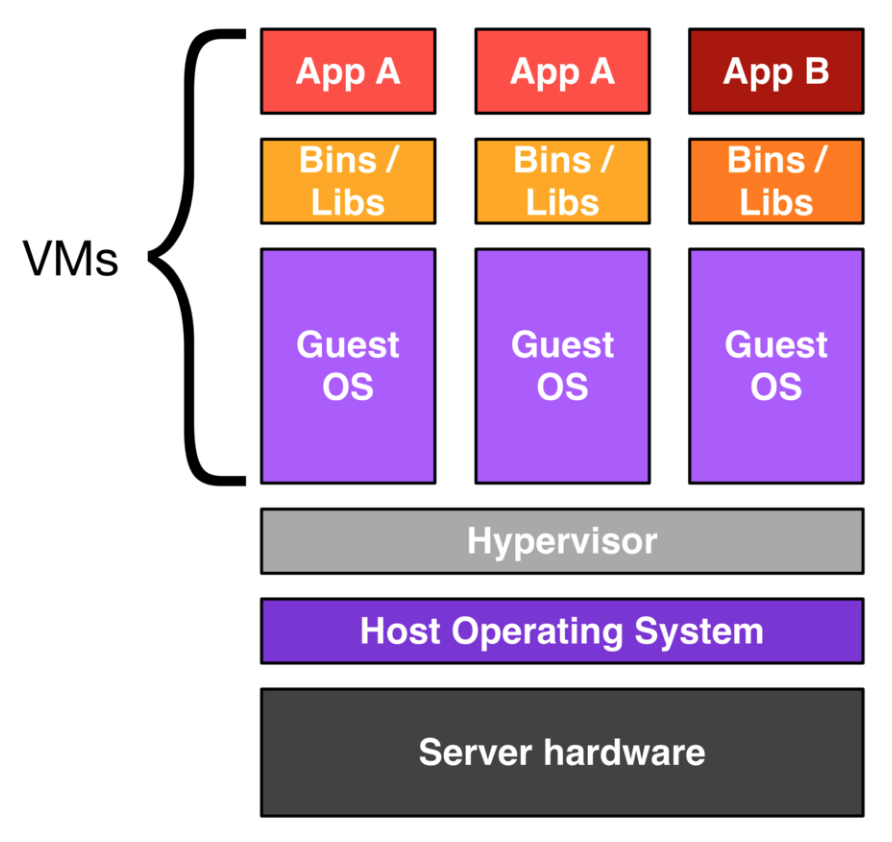

Virtual Machines vs Docker

To expand on what I mean when I say Docker gives you most of the benefits of VMs, let’s take a look at how each of them works.

This is a diagram of how a traditional VM setup for running software applications is configured. The hypervisor is the layer of your stack that does the actual virtualization: it takes computing resources from the host operating system and uses them to create fake virtual hardware that is then consumed by guest operating systems. Once you have the guest OSs installed, you can install your software applications and the binaries and libraries that support them.

VMs are great for providing complete isolation from the host OS: if something goes wrong in an application or in your guest OS, it won’t impact the host OS or screw up the other guest OSs. However, all this comes at a great cost, because the server running the stack has to spend a large share of its computing resources on virtualizing hardware and running the guest OSs.

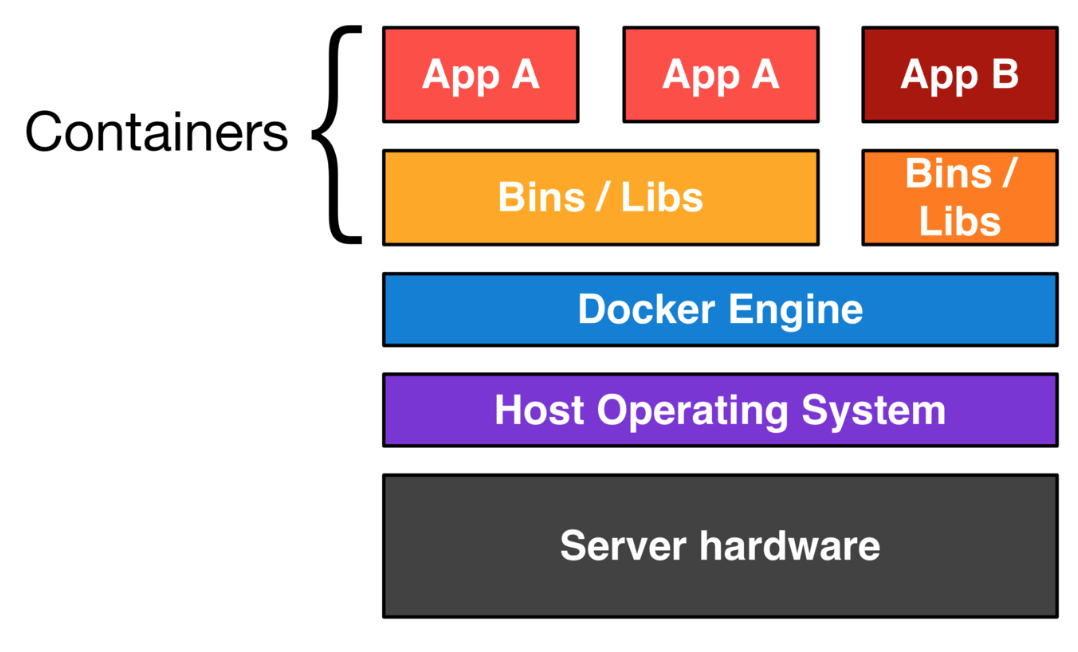

With Docker, you’d have a thinner stack:

The rule of thumb in the Docker world is to have one Docker container running on your server for each process in your stack. This isn’t a hard rule, but it’s a good one for people who are just starting out.

My own background is mostly working with Python, so the diagram above shows a stack I was deploying on the server. The first red box would be an Nginx container acting as a reverse proxy and load balancer, the green boxes would be web application servers such as Gunicorn (Green Unicorn), and the blue box would be some sort of database like a Postgres or MySQL server. The Docker Engine provides the virtual environment for all of these without having to use computing resources to duplicate virtual hardware in guest OSs.

Basic Docker Vocabulary

My general advice is that images, containers, and Dockerfiles are hard concepts to understand until you really dig in and try using Docker yourself. If you go through the Official Docker Tutorial on Docker’s website, you’ll get a taste of it, and there are also a lot of other resources out there for getting hands-on. When I was first using Docker, it was really difficult for me to understand how I should be thinking about containers until I actually tried it myself with some of my own software.

Nonetheless, here is a quick overview of some basic Docker vocabulary.

Containers

In the Docker world, containers are what your applications run in, whether on your local development machine or on a server in the cloud. In other words, a container is how you run your software in Docker. You can read more about containers at the Docker website.

The basic way to start a container is the command docker run [OPTIONS] IMAGE [COMMAND] [ARG...]. An example would be:

$ docker run busybox /bin/echo "hello world"

hello world

In the example above, I’m running the sample image busybox, and I’m feeding it the command echo "hello world". When Docker receives this command, it will start a new container based on that image, feed it the command, and then do the work necessary to execute that command. In this case, it just prints hello world to our command line.

Extra: If I run a Container for a Database, how will the database’s contents be saved across reboots?

Running dockerized databases is something people have had to think a lot about. One of the best practices people seem to be settling on is using what they call a “data container”. So, when I run my Postgres database for my websites, I’ll normally have one Docker container that is actually running the Postgres database, and another container that is just storing the data; it’s not doing any of the database operations. That way, you have a separate Docker container holding the data, which you can control and move around more easily, while the actual code and configuration for running your database is in another container. Usually the two containers are built off the same image, but the data container seems to be what gives people the most flexibility right now. However, if you do multi-host and master-slave kinds of setups, I think we’re still waiting for best practices to shake out for containers and databases there.
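The two-container arrangement described above could be sketched roughly like this. It is only an illustration, not a recommended setup: the function name, the container names, the password, and the volume path /var/lib/postgresql/data are all assumptions (the path is where the official postgres image has conventionally kept its data, so check the image you actually use).

```shell
# Sketch of the data-container pattern.
# All names, the password, and the volume path are illustrative assumptions.
start_postgres_with_data_container() {
    data_container="$1"   # hypothetical name, e.g. pgdata
    db_container="$2"     # hypothetical name, e.g. pg
    db_password="$3"      # placeholder password for the database superuser

    # Create (but never start) a container whose only job is to own the data volume.
    docker create -v /var/lib/postgresql/data --name "$data_container" postgres /bin/true

    # Run the actual database process, mounting the data container's volumes.
    docker run -d --volumes-from "$data_container" \
        -e POSTGRES_PASSWORD="$db_password" \
        --name "$db_container" postgres
}
```

Calling start_postgres_with_data_container pgdata pg example-password would leave you with one container that only holds the data and one that runs Postgres, so the database container can be removed and recreated while the data container stays put.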

Images

Images are just saved states of containers.

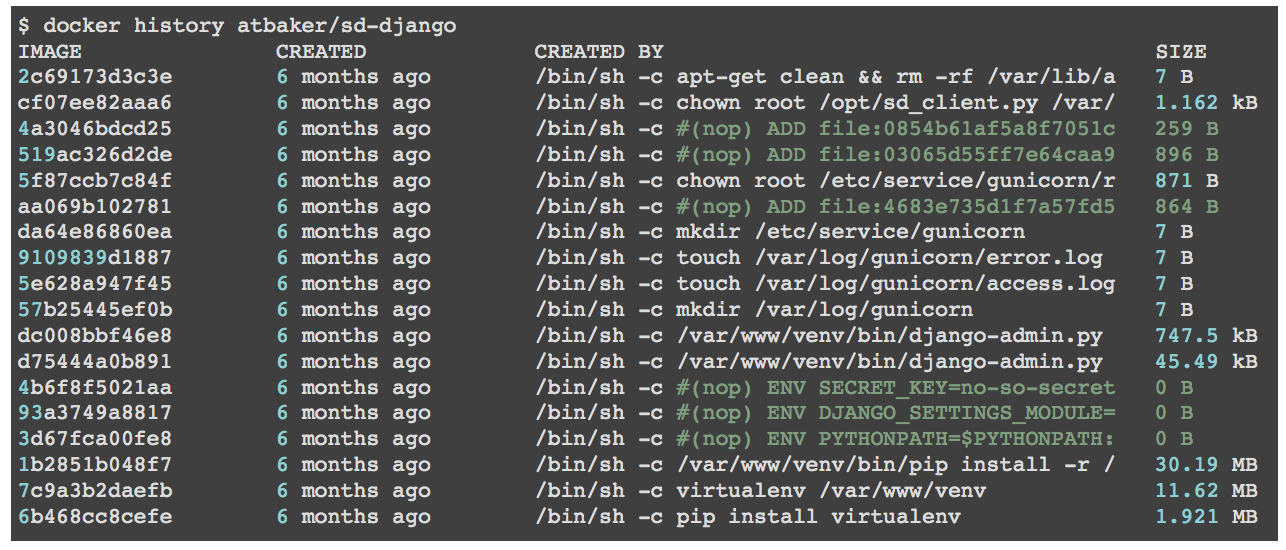

This output is from the docker history command, which shows you every command that was used to make each layer in a Docker image. You can see the first one was an apt-get clean command, then there were some chown commands, some directories being made, and so on. Images are the way you save the state of a container so you can take it someplace else.
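If you want to try this yourself, a minimal sketch might look like the following. The function name and the image name you pass in are hypothetical; docker commit and docker history are the standard CLI commands for saving a container’s state and listing an image’s layers.

```shell
# Sketch: save a container's current state as an image, then list its layers.
save_container_as_image() {
    container="$1"   # name or ID of an existing container
    image="$2"       # hypothetical name for the new image, e.g. my-snapshot

    # Record the container's filesystem changes as a new image.
    docker commit "$container" "$image"

    # Show the image's layers and the command that created each one.
    docker history "$image"
}
```

For example, save_container_as_image my-container my-snapshot would turn whatever my-container currently looks like into an image you can push, pull, and run elsewhere.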

Dockerfiles (builder)

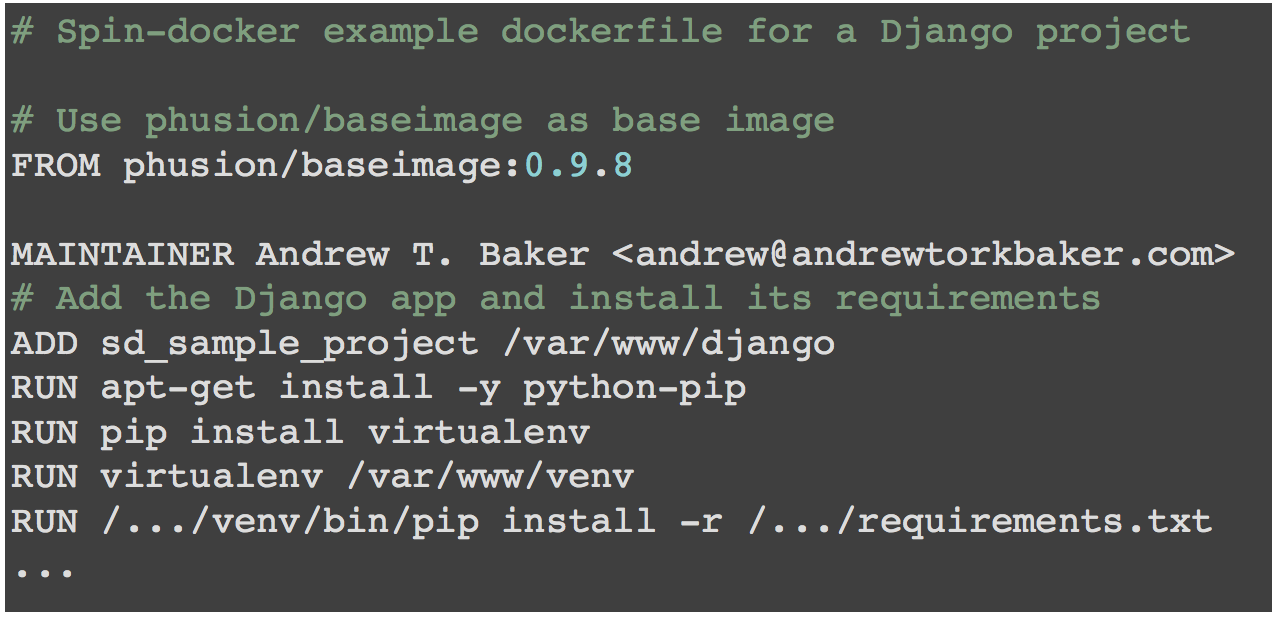

Another concept of Docker is the idea of Dockerfiles, or you might sometimes hear it referred to as the Docker builder. Dockerfiles are just a series of commands to build an image quickly, and they’re sort of your replacement for what would be a Configuration Management script to run different commands to configure all the servers and processes you need.

You’ll start off with a base image, and it will almost be as easy and simple as writing a shell script. If you go through the Official Docker Tutorial, you’ll get a lot more hands-on experience with all these concepts, so don’t worry if this doesn’t make total sense to you right now. Perhaps it would be easier to understand if we compared Docker with Git or any sort of version control software:

Version control software will help you run and deploy saved states of your source code, while Docker is sort of doing the same thing, except for your infrastructure/server states.
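As a rough illustration of what a Dockerfile looks like, here is a small sketch that writes one to disk and builds an image from it. Everything specific in it is an assumption made for the example: the ubuntu:22.04 base image, the choice of Nginx as the thing being installed, and the function and image names.

```shell
# Sketch: write a small example Dockerfile into a build directory.
write_example_dockerfile() {
    build_dir="$1"
    mkdir -p "$build_dir"
    cat > "$build_dir/Dockerfile" <<'EOF'
# Start from a base image.
FROM ubuntu:22.04

# Each instruction below adds a layer on top of the base image.
RUN apt-get update && apt-get install -y nginx && apt-get clean

# Document the port the server listens on.
EXPOSE 80

# Default command for containers started from this image.
CMD ["nginx", "-g", "daemon off;"]
EOF
}

# Sketch: build an image from the Dockerfile in the given directory.
build_example_image() {
    build_dir="$1"
    image="$2"       # hypothetical image name, e.g. my-nginx
    docker build -t "$image" "$build_dir"
}
```

Running write_example_dockerfile ./example followed by build_example_image ./example my-nginx would produce an image whose docker history output lists one layer per instruction, much like commits in a version control log.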

What are People Doing with Docker?

Isolated Development Environments with Docker

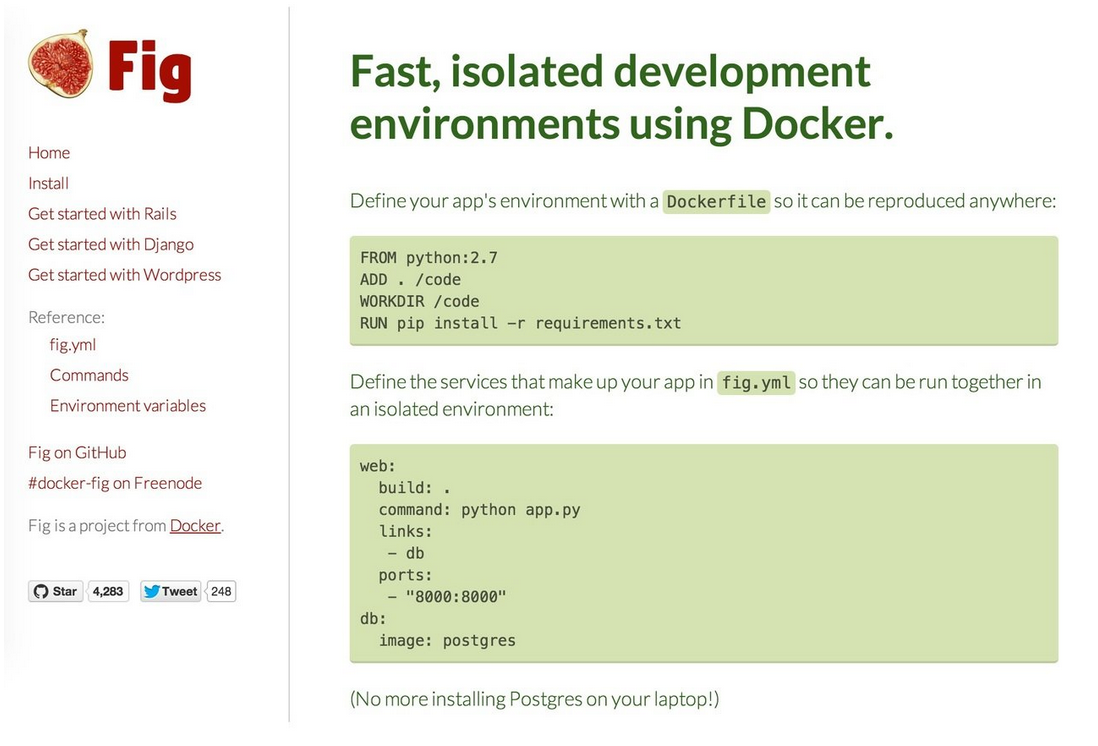

Fig is a very popular open source project that came out early with Docker, and it’s a nice little utility that makes it easier to run multiple Docker containers at once. It’s pretty lightweight, which makes it a good fit for developer environments. If you go to Fig’s website, you can see lots of great examples.

The example above is a Fig configuration file that defines a web service and database service. The web service is the custom application code that you’d be running in your web app, and the database service is a Postgres database, and you won’t have to install Postgres on your laptop for it.
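A configuration along those lines might look like the sketch below, which writes a fig.yml defining a web service and a db service. The details are assumptions for illustration only: the app.py entry point, port 5000, the /code mount point, and the placeholder database password are not taken from any real project.

```shell
# Sketch: write an example fig.yml with a web service and a database service.
write_example_fig_config() {
    project_dir="$1"
    mkdir -p "$project_dir"
    cat > "$project_dir/fig.yml" <<'EOF'
# Custom application code, built from the Dockerfile in this directory.
web:
  build: .
  command: python app.py
  ports:
    - "5000:5000"
  volumes:
    - .:/code
  links:
    - db
# Postgres runs in its own container, so nothing is installed on the laptop.
db:
  image: postgres
  environment:
    POSTGRES_PASSWORD: example-password
EOF
}
```

With a Dockerfile for the web service in the same directory, running fig up there would start both containers together.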

Fig is my favorite tool and a big reason I like to use Docker for development, since it makes it really easy for me to get started. If I need a Node.js component for my stack, or Postgres, MySQL, Nginx, etc., I can get all of these up and running in my local environment without having to install them natively on my Mac or Windows machine, so it is much easier to replicate the full production stack in my development environment.

Application Platforms

Dokku gives you a sort of Docker-specific mini-Heroku without a whole lot of effort, and it’s written in fewer than 100 lines of Bash. If you prefer more mature projects for running a really serious, large-scale PaaS, there are two other projects to check out: Flynn and Deis.

I think this is a really cool application of Docker because I’ve been in organizations before where there’s a large dev team and maybe a large systems operations team, and the dev team always wants to spin up new servers so they can have a demo to show clients, for example. Managing all those demo servers has always been a challenge for the systems team, not to mention the servers won’t last forever. So, if you’re in an organization that needs to spin up demo servers a lot, maybe using one of these open source projects to set up your internal PaaS would be a good solution.

Continuous Integration

Continuous integration (CI) is another big area for Docker. Traditionally, CI services have used VMs to create the isolation you need to fully test a software app. Docker’s containers let you do this without spending too many resources, which means your CI and your build pipeline can move more quickly.

Drone.io is a Docker-specific CI service, but all the big CI players have Docker integration anyway, including Jenkins, so it will be easy to incorporate Docker into your process.
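To give a feel for what that isolation looks like in practice, here is a minimal sketch of running a test suite in a throwaway container. It assumes a Python project whose tests can be found by unittest discovery; the function name, the python:3 image, and the /code mount point are illustrative choices, not something a particular CI service prescribes.

```shell
# Sketch: run a project's tests inside a fresh container that is removed afterwards.
run_tests_in_container() {
    project_dir="$1"   # absolute path to the project on the host

    # --rm deletes the container when the tests finish,
    # -v mounts the project into the container, and -w sets the working directory.
    docker run --rm -v "$project_dir":/code -w /code python:3 \
        python -m unittest discover
}
```

Each call starts from a clean container, so one build cannot leave state behind for the next.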

Educational Sandbox

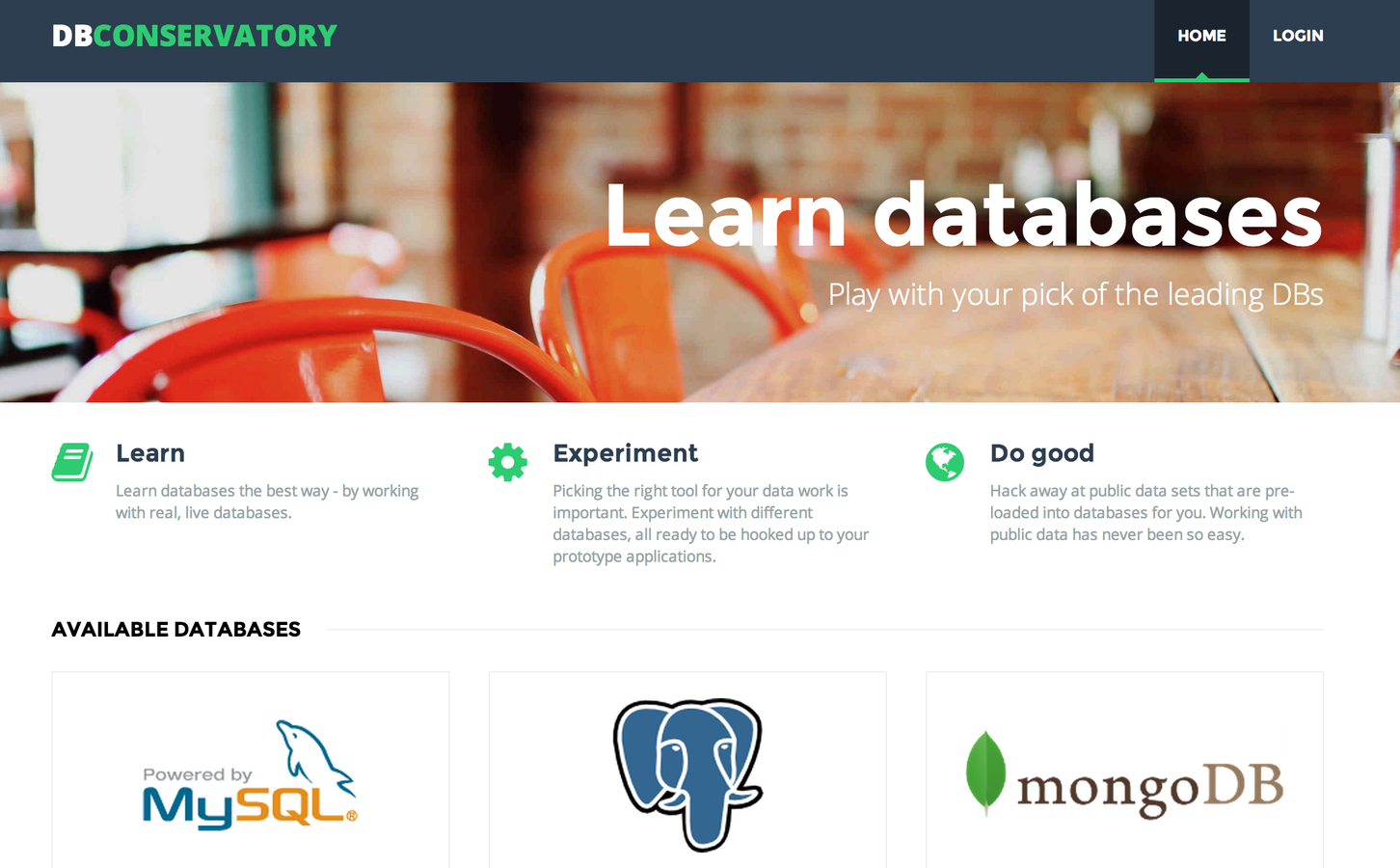

When I was learning Docker, I built a site called DBConservatory. I would sometimes run internal courses at my company to teach the basics of SQL and databases, so that developers who had been using object-relational mappers for most of their careers would have a chance to dig a little deeper and see how things work behind the scenes. I wanted a service that would let me quickly spin up a database for someone to learn on, let them use it as much as they wanted, and then make the database disappear at the end so I wouldn’t have to worry about it.

There was another open source Docker project really early on that focused on helping people easily learn application environments and programming language environments. So if you are trying to learn Python, Node, or Ruby and don’t want to go through the hassle of setting things up on your local development machine, there’s probably a Docker project out there that will help you do this. We’ll see more projects like these as Docker keeps permeating the industry.

What Developers are Using Docker for

I’m a developer as well, so I gave hints of this earlier, but one of the reasons I really like Docker is that it’s so easy to create an environment on your local machine that closely mirrors what’s running in production. You get the most benefit out of using Docker for local development if your production server is also using the same containers you do your deployments in.

Even if you’re using something like the Django or Flask dev server, it’s easy to get things up and running, since you don’t have to worry about whether your cache is wired up or whether your Nginx reverse proxy is running. It’s even better when you use Fig, since you can get all these components running on your local development machine.

Altogether, for me the key benefit of using Docker as a developer is that you get a development environment that is more faithful to your organization’s production environment. For more information about the benefits of Docker, you can refer to the post: Why 2015 is a great year to learn Docker.
