Understanding Amazon’s Alexa and Building an Alexa Skill

Alexa, Amazon’s cloud-based voice service, powers voice experiences on millions of devices, including Amazon Echo, Echo Dot, Amazon Tap, and Fire TV devices. Alexa provides capabilities, or skills, that enable customers to interact with devices in a more intuitive way using voice.

Examples of skills include the ability to play music, answer general questions, set an alarm or timer, and more. The Alexa Skills Kit (ASK) is a collection of self-service APIs, tools, documentation, and code samples that make it fast and easy for you to add skills to Alexa.

Alexa Skill:

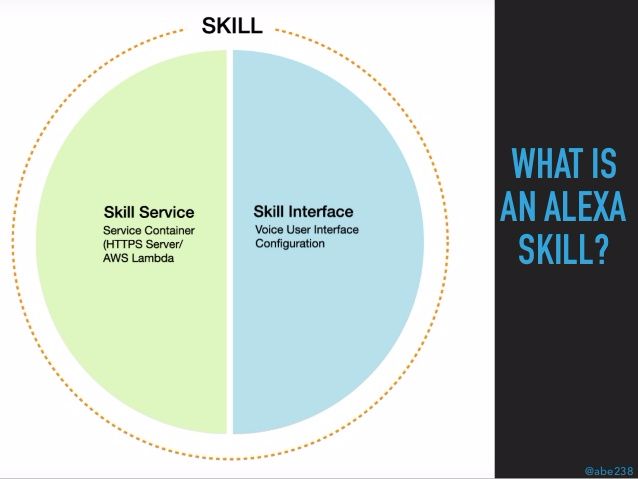

An Alexa skill consists of two main components:

- a skill service

- a skill interface.

As developers, we need to code skill services and configure the skill interface via Amazon’s Alexa skill developer portal. The interaction between the code on the skill service and the configuration on the skill interface results in a working "skill."

Let's try to understand each module in depth.

The Skill Service is the first component when you create a skill. The Skill Service lives in the cloud and hosts code that we will write. This is the code that will receive JSON payloads from Alexa.

In other words, the skill service is the business logic of the skill: it determines what actions to take in response to a user’s speech. The skill service layer manages HTTP requests, user accounts, information processing, sessions, and database access.

Building A Skill:

Let's now look at how to use the Alexa Skills Kit (ASK) to build an Alexa skill, which is a voice-driven application for Alexa. Our example is the Greeter skill, which says “Hello” when the user speaks certain words. It responds with a greeting on any Amazon Echo or Alexa-enabled device.

The Greeter skill service is where the response “Hello” is generated and returned to an Alexa-enabled device. A skill service can be implemented in any language that can be hosted on an HTTPS server and return JSON responses.
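As a rough sketch of the kind of JSON a skill service returns, the helper below wraps a piece of text in a plain-text speech response. The function name `buildSpeechResponse` is hypothetical, and the field names (`version`, `outputSpeech`, `shouldEndSession`) reflect the Alexa custom skill response format as we understand it, so check them against the current documentation before relying on them.

```javascript
// Hypothetical helper: wraps text in the JSON envelope returned to Alexa.
function buildSpeechResponse(text, shouldEndSession = true) {
  return {
    version: '1.0',
    response: {
      // PlainText tells Alexa to read the text aloud as-is.
      outputSpeech: { type: 'PlainText', text },
      // true closes the session after the response is spoken.
      shouldEndSession,
    },
  };
}

console.log(JSON.stringify(buildSpeechResponse('Hello'), null, 2));
```

Keeping the envelope in one small function means the rest of the skill service only has to decide what text to speak.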

We will implement the skill service in Node.js and run it on AWS Lambda, Amazon’s serverless compute platform.

For the HTTPS server, AWS Lambda is a good option because the Alexa service can be set up as a trusted event source for a Lambda function, which lets the two communicate securely without extra setup. It is possible to use your own HTTPS server, but doing so requires additional configuration to enable SSL with a signed digital certificate. No such configuration is required with AWS Lambda.

Node.js is a good choice because AWS Lambda supports it as a runtime, and because both Node.js and JavaScript have very active developer communities and are convenient to develop in and debug.

Skill Service:

A skill service implements event handlers. These event handler methods define how the skill behaves when the user triggers an event by speaking to an Alexa-enabled device.

We can define event handlers on the skill service to handle particular events like the OnLaunch event.

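    // Speak the greeting and end the session.
    var helloAlexaResponseFunction = function (intent, session, response) {
        response.tell(SPEECH_OUTPUT);
    };

    // Register the function as the handler for the launch event.
    // It must be defined before this assignment, or the handler would be undefined.
    GreeterService.prototype.eventHandler.onLaunch = helloAlexaResponseFunction;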

The OnLaunch event is sent to the Greeter skill service when the skill is first launched by the user. Users trigger this event by saying “Alexa, open Greeter” or “Alexa, start Greeter”. Another type of handler a skill service can implement is called an intent handler.

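    // Speak the greeting and end the session.
    var helloAlexaResponseFunction = function (intent, session, response) {
        response.tell(SPEECH_OUTPUT);
    };

    // Map each intent name to the function that handles it.
    GreeterService.prototype.intentHandlers = {
        "HelloAlexaIntent": helloAlexaResponseFunction
    };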

An intent is a type of event that indicates something a user would like to do. The basic Greeter skill has only one intent, saying “Hello,” which we call the HelloAlexaIntent.

Intent handlers correspond to the features or interactions a skill offers. A skill service can have many intent handlers, each reacting to a different intent triggered by the spoken words that we, as developers, specify.
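To illustrate how a skill service might route several intents, here is a minimal sketch of a handler table with a lookup function. `GoodbyeAlexaIntent`, `dispatchIntent`, and the fallback text are assumptions made purely for illustration; the Greeter skill itself only defines `HelloAlexaIntent`.

```javascript
// Each key is an intent name; each value returns the text to speak.
const intentHandlers = {
  HelloAlexaIntent: () => 'Hello',
  // Hypothetical second intent, added only to show multiple handlers.
  GoodbyeAlexaIntent: () => 'Goodbye',
};

// Look up the handler for an intent name; fall back when none is registered.
function dispatchIntent(intentName) {
  // hasOwnProperty avoids matching inherited keys such as "toString".
  const known = Object.prototype.hasOwnProperty.call(intentHandlers, intentName);
  return known ? intentHandlers[intentName]() : "Sorry, I don't know that one.";
}

console.log(dispatchIntent('HelloAlexaIntent')); // Hello
console.log(dispatchIntent('UnknownIntent'));    // Sorry, I don't know that one.
```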

Skill Interface:

The skill interface configuration is the second part of creating a skill. This is where we specify the words that trigger the intents of the skill service defined above.

The skill interface is responsible for processing the user’s spoken words. It translates audio from the user into events the skill service can handle, and it sends those events so the skill service can do its work. The skill interface is also where we specify what a skill is called so users can invoke it by name when talking to an Alexa-enabled device, for example, “Alexa, ask Greeter to say Hello.”

The name users use to address a skill is called the skill invocation name. We are naming our skill Greeter.

Within the skill interface, we define the skill’s interaction model.

Interaction Model:

The interaction model is what trains the skill interface so that it knows how to listen to the user's spoken words and resolve them into specific intent events. You define the words that should map to particular intent names in the interaction model by providing a list of sample utterances. A sample utterance is a string that represents one possible way a user may talk to the skill. These utterances are used to generate a natural language understanding model, which resolves the user’s voice to our skill’s intents.
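The mapping from utterances to intents can be pictured with a toy lookup like the one below. This is only an illustration: the real skill interface builds a natural language understanding model rather than doing exact string matching, and `normalize`, `resolveIntent`, and the data layout are assumptions, not part of the Alexa Skills Kit.

```javascript
// Sample utterances, each paired with the intent it should resolve to.
const sampleUtterances = [
  { intent: 'HelloAlexaIntent', utterance: 'say hello' },
  { intent: 'HelloAlexaIntent', utterance: 'say hi' },
];

// Normalize text so that case, punctuation, and extra spaces don't matter.
function normalize(text) {
  return text.toLowerCase().replace(/[^a-z\s]/g, '').replace(/\s+/g, ' ').trim();
}

// Return the intent whose sample utterance matches exactly, or null.
function resolveIntent(spokenText) {
  const spoken = normalize(spokenText);
  const match = sampleUtterances.find((s) => normalize(s.utterance) === spoken);
  return match ? match.intent : null;
}

console.log(resolveIntent('Say Hello!'));  // HelloAlexaIntent
console.log(resolveIntent('sing a song')); // null
```

Even in this toy version, adding more utterances for the same intent widens the set of phrases that resolve successfully.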

Intent Schema:

We also declare an intent schema on the interaction model. An intent schema is a JSON structure which declares the set of intents a service can accept and process.

The intent schema tells the skill interface what intents the skill service implements. Once we provide the sample utterances, the skill interface can resolve the user’s spoken words to the specific events the skill service can handle. An example is the HelloAlexaIntent event in the skill we are going to build.

The intent schema has the following syntax:

    {
      "intents": [
        {
          "intent": "string",
          "slots": [
            {
              "name": "string",
              "type": "string"
            },
            {
              "name": "string",
              "type": "string"
            }
          ]
        }
      ]
    }
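For the Greeter skill, which has a single intent and no slots, the schema could be as small as the sketch below. It is written as a JavaScript object and printed as JSON; the variable name `greeterIntentSchema` is hypothetical, and leaving out `slots` for an intent that has none is an assumption based on the syntax above.

```javascript
// Intent schema for the Greeter skill: one intent, no slots.
const greeterIntentSchema = {
  intents: [
    { intent: 'HelloAlexaIntent' },
  ],
};

// Print the JSON that would be entered in the skill interface configuration.
console.log(JSON.stringify(greeterIntentSchema, null, 2));
```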

We will provide both the sample utterances and the intent schema in the Alexa skill interface. When defining the sample utterances, consider the variety of ways the user might try to ask for an intent. A user might say, “Alexa, ask Greeter to say Hello”, or the user might also say “Alexa, ask Greeter to say Hi”. Therefore, you should provide a comprehensive list of sample utterances to the interaction model. This will make the user experience smoother by increasing the chances of a match.

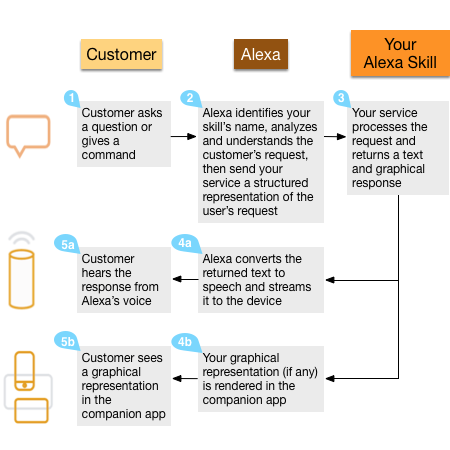

Once the skill interface has been set up with sample utterances that match voice patterns to our skill service’s intents, a request can make its full journey between the skill interface and the skill service.

Example of a User Interaction Flow

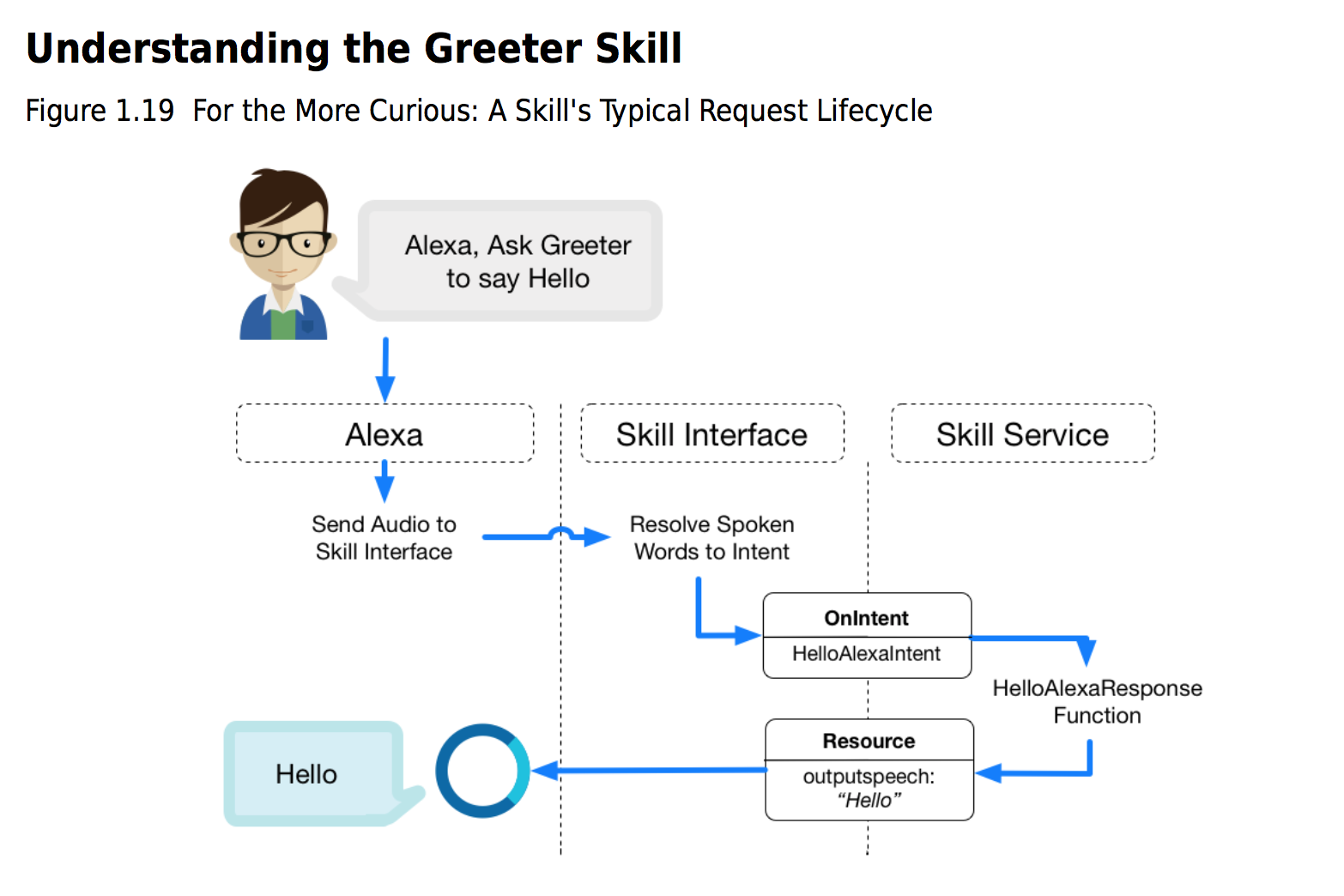

Here is how the user’s spoken words are processed by the Greeter skill.

When the user says, “Alexa, ask Greeter to say Hello”, the skill interface resolves the audio to an intent event because we configured the interaction model. We set the invocation name to Greeter and provided the interaction model with sample utterances in the skill interface. The sample utterances list includes “Say Hello”.

So the skill interface is able to match the user’s spoken words to the intent name. Once the event is recognized by the skill interface, it is sent to the skill service and the matching intent handler is triggered. The intent handler returns an output speech response of “Hello” to the skill interface, which then sends it to the Alexa device. Finally, the device speaks the response.
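The flow above can be sketched end to end by feeding a hand-written request into a small handler. The request below is a trimmed-down approximation of what Alexa sends (real requests carry more fields, such as session and application details), and `speak`, `handleRequest`, `handler`, and the fallback text are hypothetical names and values chosen for this sketch.

```javascript
// Build the JSON response that carries the speech back to the device.
function speak(text) {
  return {
    version: '1.0',
    response: { outputSpeech: { type: 'PlainText', text }, shouldEndSession: true },
  };
}

// Route an incoming request to the matching behavior.
function handleRequest(event) {
  const request = event.request;
  // "Alexa, open Greeter" arrives as a launch request.
  if (request.type === 'LaunchRequest') {
    return speak('Hello');
  }
  // "Alexa, ask Greeter to say Hello" arrives as an intent request.
  if (request.type === 'IntentRequest' && request.intent.name === 'HelloAlexaIntent') {
    return speak('Hello');
  }
  return speak("Sorry, I didn't understand that.");
}

// On AWS Lambda, an async wrapper like this would be exported as the handler.
const handler = async (event) => handleRequest(event);

// Trimmed-down request for "Alexa, ask Greeter to say Hello".
const sampleEvent = {
  version: '1.0',
  request: { type: 'IntentRequest', intent: { name: 'HelloAlexaIntent' } },
};

handler(sampleEvent).then((res) => console.log(res.response.outputSpeech.text)); // Hello
```

Separating the synchronous `handleRequest` from the async wrapper keeps the routing logic easy to test locally without deploying anything.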

Summary & Resources

An Alexa skill is made up of a skill interface and a skill service. The skill interface configuration defines how a verbal command is resolved to events, which are then routed to the skill service. To build an Alexa skill, you write a skill service, configure a skill interface, and then test and deploy the skill.

I hope this article helps you understand the architecture of an Alexa skill and how it is developed.

If you want to learn about how you can actually create your own Alexa skill, read this series: